Taking the 4K Challenge: GTX 1070 SLI on Z170 and X99

Introduction

We’ve published a lot of articles over the years looking at CPU performance in games, such as a showdown between the Core i7-4790K and Core i7-5820K. In that comparison, we found the two CPUs to be quite evenly matched, with the six-core 5820K surprisingly not pulling ahead in most games. And most recently, we published an analysis of how Intel’s Core i5-6600K, Core i7-6700K, and Core i7-6900K do when paired up with a hot-clocked GTX 1080 video card.

But this is not that article. Rather, here we are going to be looking specifically at whether the X99 platform and its 40 PCIe lanes is a superior pick for enthusiasts running dual video cards, as compared to the 20 PCIe lanes of the Z170 platform. Specifically, the X99 platform allows high-end video cards access to 16 PCIe lanes each, while the Z170 allows a card access to 16 PCIe lanes only in a single-card configuration. Once you add a second card, those PCIe lanes are divided evenly between the two cards, giving each card half as much available bandwidth. Based on this fact, we can hypothesize that for dual-card systems, paying for those extra PCIe lanes may well be worth it. For a single-card system, however, Z170 may be preferable, even for 4K gaming, because you get access to newer-tech CPUs, specifically Skylake and soon enough Kaby Lake. As has been the case for quite some time, Intel’s enthusiast-level High-End Desktop (HEDT) platform is typically one to two generations behind the current consumer-level platform. Right now, that means HEDT uses the Broadwell-E design, which is 5-8% slower than Skylake in instructions per clock cycle, and also runs at lower default core clocks due to the heat generated by its larger number of cores.
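To put "half as much available bandwidth" into rough numbers, here is a minimal sketch of the theoretical link bandwidth per card. It assumes PCIe 3.0 signalling (8 GT/s per lane with 128b/130b encoding) on both platforms; the function name is ours, and real-world throughput will be lower than these theoretical peaks.

```python
# Approximate one-direction PCIe bandwidth per graphics card.
# Assumption: PCIe 3.0 signalling (8 GT/s per lane, 128b/130b encoding).

GT_PER_SEC_PER_LANE = 8.0        # PCIe 3.0 raw transfer rate per lane
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line-encoding overhead


def pcie3_bandwidth_gb_s(lanes: int) -> float:
    """Return the theoretical one-direction bandwidth in GB/s for a link width."""
    gigabits_per_sec = GT_PER_SEC_PER_LANE * ENCODING_EFFICIENCY * lanes
    return gigabits_per_sec / 8  # bits -> bytes


if __name__ == "__main__":
    links = [
        ("x16 (each card on X99, or a single card on Z170)", 16),
        ("x8 (each card in SLI on Z170)", 8),
    ]
    for label, lanes in links:
        print(f"{label}: {pcie3_bandwidth_gb_s(lanes):.2f} GB/s")
```

Under these assumptions an x16 link peaks at roughly 15.75 GB/s in each direction, and an x8 link at roughly half of that.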

In a sense, we’re building on our findings from several previous articles to provide an even more nuanced look at exactly what determines overall gaming performance in high-end systems. If you’re in the market for a high-end gaming system, and specifically one intended to run at 4K, you’ll want to keep reading! [Update: we’ve since gone a step further and pitted 1070 SLI vs. 1080 SLI vs. the Titan X Pascal at 4K!]

Test Setup

Here are the two GTX 1070 models we used, running GeForce driver version 368.69:

- Asus GeForce GTX 1070 8GB Founders Edition (running at reference clocks)

- EVGA GeForce GTX 1070 8GB Superclocked (detuned to run at reference clocks)

You may ask why we are using unmatched cards here. The answer is two-fold. First, because we buy all of our own video cards at retail to avoid any potential source of bias, it makes sense for us to buy different models, as we’d otherwise miss the opportunity to gain exposure to various manufacturers’ offerings, packaging, and warranty support. Secondly, we’ve found that when running two cards in SLI, the ideal cooling setup is to have an open-air card on top, and a blower model on the bottom, so that the top card is not inundated by the lower card’s heat. Unfortunately, this does mean that the two cards will be running at different speeds when using default settings, so we’ve detuned our EVGA Superclocked model with a -88MHz offset to run in step with the Founders Edition card.

With that explanation out of the way, we can move on to the specs for our two test platforms. First our Z170-based system, using Intel’s best quad-core processor:

- CPU: Intel Core i7-6700K, overclocked to 4.4GHz

- Motherboard: Gigabyte GA-Z170X-Gaming 6

- RAM: Geil 2x8GB Super Luce DDR4-3000, 15-17-17-35

- SSD #1: Samsung 850 Evo M.2 500GB

- SSD #2: Crucial MX200 1TB

- Case: Phanteks Enthoo Evolv

- Power Supply: EVGA Supernova 850 GS

- CPU Cooler: Noctua NH-U14S

- Operating System: Windows 10

And second, our X99-based system, using Intel’s best eight-core processor:

- CPU: Intel Core i7-6900K, overclocked to 4.4GHz

- Motherboard: Asus X99-Pro/USB3.1

- RAM: G.Skill 4x8GB Ripjaws4 DDR4-3000, overclocked to DDR4-3200, 16-16-16-36

- SSD #1: Samsung 950 Pro M.2 512GB

- SSD #2: Samsung 850 Evo 1TB

- Case: SilverStone Primera PM01

- Power Supply: EVGA Supernova 1000 PS

- CPU Cooler: Corsair Hydro H100i v2

- Operating System: Windows 10

A few comments on overclocking here. First, the 6700K comes from the factory at a much higher clock speed than the 6900K (4.0GHz base vs. 3.2GHz base). In practice, the difference isn’t quite that much, because under a full load, the 6700K stays at 4GHz (boost only occurs on single-threaded workloads, and even then it only jumps to 4.2GHz). The 6900K in fact boosts to 3.5GHz with a full load, and 3.7GHz with a single-threaded load. To even out the mismatch as much as possible, we overclocked both CPUs to 4.4GHz, which is pretty easy on the 6700K, and near the limit for the 6900K. Even so, the Skylake architecture used by the 6700K is a bit more efficient per clock cycle than the 6900K’s Broadwell-E design, meaning the 6700K has a slight advantage, at least for single-threaded workloads (like some physics routines in games, which cannot be split between cores). Some may argue that an “equal” overclock would have pushed both CPUs by the same percentage or taken each to its maximum overclock, but we had to draw the line somewhere, so we decided to just set them at the same clocks. Another minor point: because the X99 platform can’t run DDR4-3000 memory without an oddball 125MHz motherboard strap, we overclocked our RAM to DDR4-3200 on the X99 system to allow it to run at an even 100MHz motherboard strap.
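To show how uneven a "same clocks" overclock is in percentage terms, here is a small sketch using only the clock speeds quoted above. The helper name is ours and purely illustrative.

```python
def overclock_pct(stock_ghz: float, overclocked_ghz: float) -> float:
    """Return the overclock as a percentage increase over the stock clock."""
    return (overclocked_ghz / stock_ghz - 1.0) * 100.0


if __name__ == "__main__":
    target_ghz = 4.4  # both CPUs were set to this clock
    stock_clocks = [
        ("6700K vs. 4.0GHz base / full-load clock", 4.0),
        ("6900K vs. 3.2GHz base clock", 3.2),
        ("6900K vs. 3.5GHz full-load boost", 3.5),
    ]
    for label, stock in stock_clocks:
        print(f"{label}: +{overclock_pct(stock, target_ghz):.1f}%")
```

By this measure the 6700K is pushed about 10% past its full-load clock, while the 6900K is pushed about 26% past its full-load boost (37.5% past base), which is why 4.4GHz is easy on one chip and near the limit on the other.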

The good news is that all of this really doesn’t matter, for two reasons: (1) we’re going to be pushing our video cards so hard that CPU limitations will become essentially non-existent, and (2) our core findings will be based on a PCIe scaling analysis, showing how much of a performance boost each of our platforms gets jumping from one card to two. This is based entirely on the platform’s PCIe lanes as long as CPU limitations are kept at bay. We’ll be doing a CPU shootout soon enough to uncover the value of extra cores and Hyperthreading, but this is not that article!

For our testing, we’re using two benchmark tests and four games: 3DMark Fire Strike Ultra, 3DMark Time Spy, Crysis 3, Battlefield 4, Far Cry 4, and The Witcher 3. Fire Strike Ultra is a 4K-specific benchmark, so we didn’t need to tweak any settings for it to do the job we needed. Time Spy is actually a 2560×1440 benchmark, but as you’ll see, it pushes systems pretty hard by harnessing DirectX 12, and we didn’t see a need to change the default setting from 1440p to 4K. As for our four game tests, we pushed every button and toggled every switch, selecting what you might call “ludicrous” quality for each benchmark run. That meant full multi-sampling anti-aliasing (which is overkill at 4K), the highest texture resolutions, and in Far Cry 4 and The Witcher 3, every Nvidia Gameworks feature available. You want max settings, we’re giving you max settings! Just one exception to all of this: Crysis 3 is so demanding at maximum 8x MSAA that we chose to instead run 4x MSAA for our benchmarks, as we couldn’t actually get a clean run through our real-world benchmark on a single GTX 1070 using 8x MSAA. And to be clear, you most definitely do not need it at 4K anyway.

OK, now that we’ve explained the method to our madness, it’s time to show you the results!

The Benchmarks

3DMark

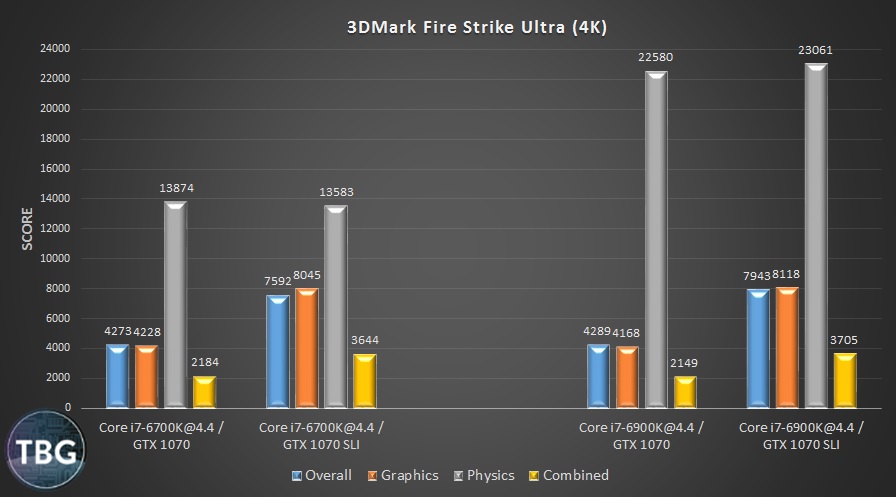

As always, we start with baseline data from 3DMark, which is useful to confirm that SLI is working, and also provides some goalposts for what to expect in terms of SLI scaling.

In this graph, focus your attention on the orange bars. They signify the Graphics Score, which is what we really care about when it comes to the performance of the GTX 1070 video cards in our systems. Note that in a single-card configuration, the 6700K on the Z170 platform actually outperforms the 6900K on the X99 platform by about 1.5%. That’s within the margin of error, but when looked at in tandem with the dual-card configurations, where the 6900K on the X99 board wins by 1%, we start to see a hint of the benefits of more bandwidth. Remember, when using a single card, both platforms can provide 16 PCIe lanes. Double up on cards and the 6700K can only provide 8 lanes to each card. That, in a nutshell, is why we see the flip in the standings.

Looked at another way, in terms of PCIe efficiency, the 6700K allows 90% scaling when jumping from one card to two, while the 6900K allows 95% scaling. Remember, this is not CPU limited, it’s limited by the PCIe lanes each platform makes available. While the 6900K can put out a massive amount of Physics performance, that doesn’t affect the Graphics Score.
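For readers who want to check the arithmetic, here is a minimal sketch of how we define SLI scaling, and of how a small single-card deficit can flip into a dual-card lead. The function names are ours, and the scores are normalized placeholders built from the percentages quoted above rather than raw 3DMark numbers.

```python
def sli_scaling_pct(single_score: float, dual_score: float) -> float:
    """Scaling is the percentage gained by adding the second card."""
    return (dual_score / single_score - 1.0) * 100.0


def dual_score(single_score: float, scaling_pct: float) -> float:
    """Project a dual-card score from a single-card score and a scaling figure."""
    return single_score * (1.0 + scaling_pct / 100.0)


if __name__ == "__main__":
    # Normalized single-card scores: Z170 is about 1.5% ahead of X99.
    x99_single, z170_single = 1.000, 1.015

    x99_dual = dual_score(x99_single, 95.0)    # 95% scaling on X99
    z170_dual = dual_score(z170_single, 90.0)  # 90% scaling on Z170

    x99_lead_pct = (x99_dual / z170_dual - 1.0) * 100.0
    print(f"X99 dual-card lead over Z170: {x99_lead_pct:.1f}%")
```

Plugging in the Fire Strike Ultra figures, the 1.5% single-card deficit turns into a dual-card lead of about 1% for X99, which matches the flip seen in the graph.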

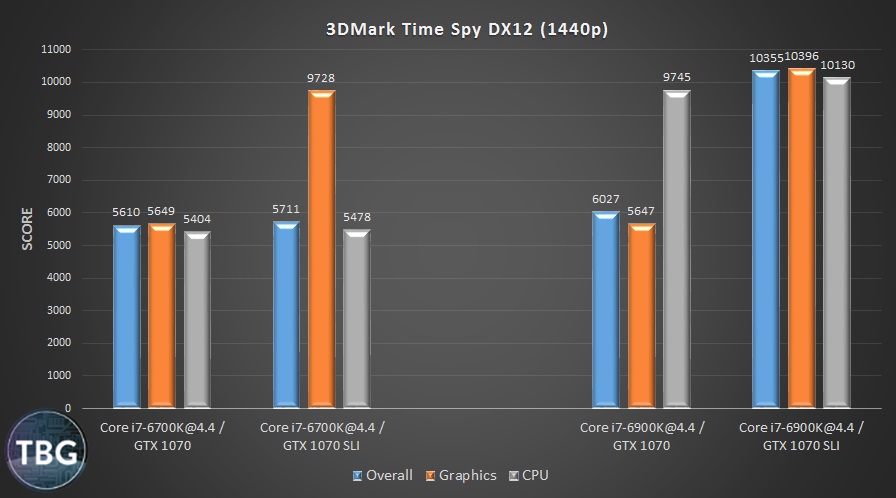

3DMark Time Spy

Time Spy is a sign of things to come in PC gaming. Its advanced graphics engine has monumentally more draw calls than Fire Strike. According to 3DMark, this new benchmark throws five times more graphics content at the system to process. So while the 6700K only allows our GTX 1070 cards to offer a 72% boost in SLI, the 6900K allows 85% scaling in SLI. That means we go from a virtual tie with a single 1070 to the 6900K being 7% ahead in SLI.

Note that due to the complex ways in which DirectX 12 allows this kind of jump in performance, we aren’t quite sure whether the Graphics Score is affected by CPU power. We’ll be doing more CPU-centric testing in the near future to try to determine exactly what’s at the heart of performance in Time Spy.

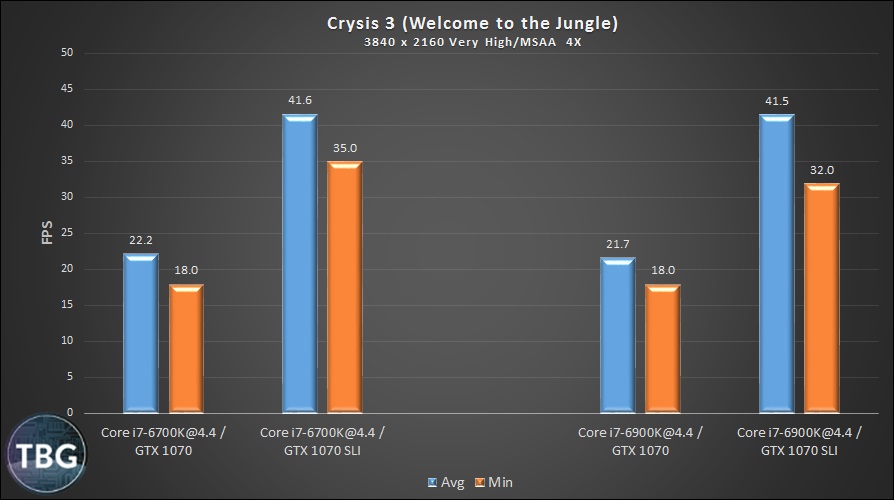

Crysis 3

Crysis is as close to a universal PC benchmark as we’ve ever seen in an actual PC game. That was true of the original Crysis, a bit less so in the console-focused Crysis 2, but Crysis 3 was another game-changer, so to speak, when introduced in 2013. It was so forward-looking that no system could come close to maxing it out at 1080p when it was released. And yet, now we have a configuration that can max this game out at 4K and make it look and play great! Indeed, this game engine is so smooth that you don’t need more than 40 frames per second to have an ultra-responsive gaming experience.

And interestingly, while this game has traditionally been CPU limited at high settings when running at 1080p or 1440p, pushed to a GPU-crushing 4x MSAA at 4K, nothing but the GPU matters. So our powerful 8-core X99-based system doesn’t get a leg up on its cheaper cousin. The two systems are tied with a single card, and are essentially tied with dual cards as well. We’re a bit surprised that the higher bandwidth available on the 6900K platform doesn’t yield any benefits here, but that may be because the bottleneck at the settings we used isn’t PCIe bandwidth but rather GPU processing power.

All right, let’s now take a look at a handful of other popular PC games to see how our platforms perform when hit with a 4K gaming load.

These benchmarks were all collected in actual runs through the game world, as none of these games has a built-in benchmark. For each of the games, we chose a specific 30-second scene to repeat in identical fashion, collecting three samples for each setup. We found these to be remarkably consistent, so we simply averaged all three to arrive at a final number.
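As a sketch of how three runs reduce to one number, the snippet below averages the samples and reports how far apart they were. The frame rates shown are hypothetical placeholders, not our measured results, and the helper name is ours.

```python
from statistics import mean


def summarize_runs(fps_runs: list[float]) -> tuple[float, float]:
    """Return (average fps, spread between best and worst run as a % of the average)."""
    average = mean(fps_runs)
    spread_pct = (max(fps_runs) - min(fps_runs)) / average * 100.0
    return average, spread_pct


if __name__ == "__main__":
    # Hypothetical samples from three passes through the same 30-second scene.
    samples = [40.0, 41.0, 42.0]
    average, spread_pct = summarize_runs(samples)
    print(f"Average: {average:.1f} fps (run-to-run spread: {spread_pct:.1f}%)")
```

A small spread is what justifies a plain average; if the runs had disagreed widely, more samples would have been the safer route.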

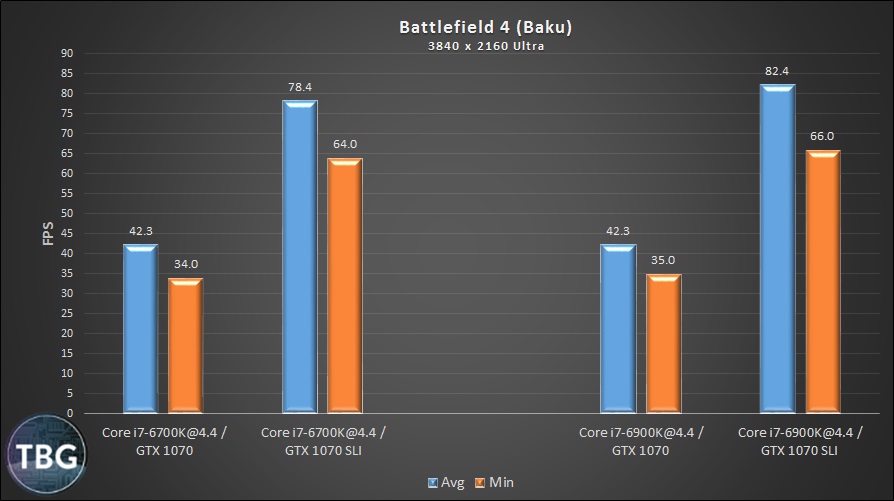

Battlefield 4

So, with a single GTX 1070, both systems perform identically. Not too surprising given that both are operating at PCIe x16. Drop in a second GTX 1070, and the high-end platform runs away with a big win. Scaling at 95%, which is about the maximum we can expect based on our 3DMark tests, it gets way ahead of the 6700K/Z170 platform, which only scales at 85%. Note that while this game engine does utilize multiple cores, it isn’t at all limited by a 6700K, especially at 4K.

These are the kind of results that will make ultra-high-end builders happy, but can the X99 platform keep this lead going?

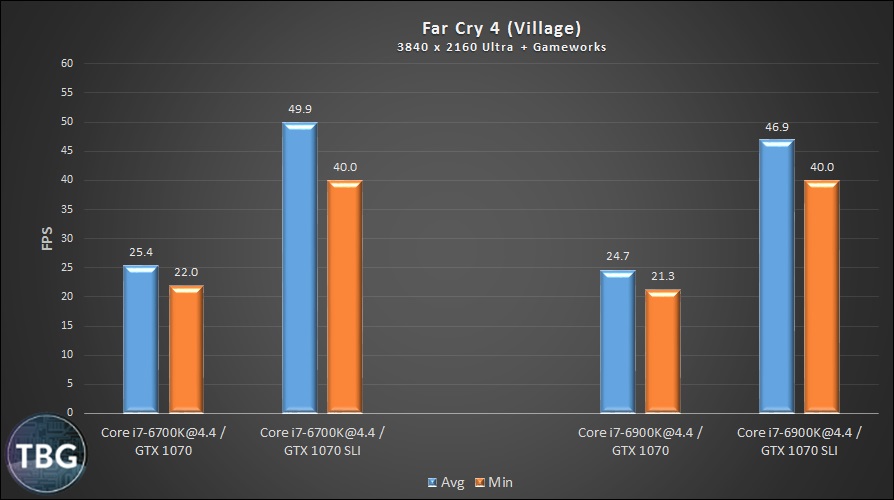

Far Cry 4

Uh, oh, Far Cry 4 teaches the big kid a lesson here. While the two systems are virtually tied with a single GTX 1070, double up on cards and the 6700K/Z170 jumps ahead. We saw this odd behavior before in our Quad vs. Hex Showdown, where a 4790K beat the 5820K at equal clock speeds, despite both being Haswell-based processors. For whatever reason, Far Cry 4 doesn’t take well to the advanced X99 platform. Perhaps there’s some additional driver overhead that’s slowing things down.

The good news is that both SLI setups are totally playable at 4K, whereas it was actually pretty hard for us to get through the benchmarking session with a single GTX 1070. Remember, we pushed this game to the max, using insane settings like MSAA and GameWorks HBAO+ and God Rays. SLI makes this game a real treat at 4K.

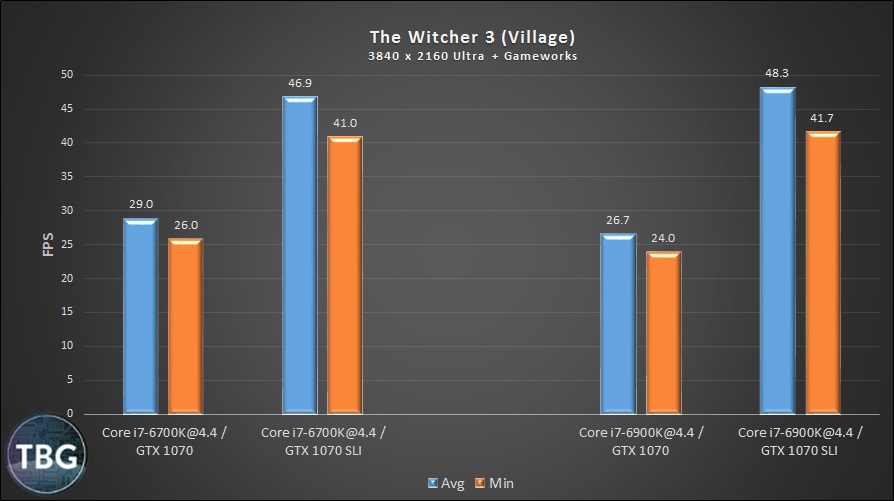

The Witcher 3

The newest game in our suite, The Witcher 3 was a critics’ favorite for 2015. Yes, Fallout 4 won an equal number of game of the year awards, but graphically speaking, The Witcher 3 was way, way ahead. And it’s the finest example of what a game will do when given nearly unlimited PCIe bandwidth. In a single-card configuration, we see the big-boy platform stumble, losing by 9%. Add a second card, and it’s now ahead by 3%. Looked at another way, GTX 1070 SLI scales at 81% on X99 and just 62% on Z170. If ever there were a poster child for the benefits of big bandwidth, this is it.

Note that this game requires very little CPU power, especially when pushing a 4K resolution, anti-aliasing, and Nvidia HairWorks, which draws massively on GPU power. And again, this game went from choppy and close to unplayable on a single GTX 1070 to incredibly smooth with GTX 1070 SLI.

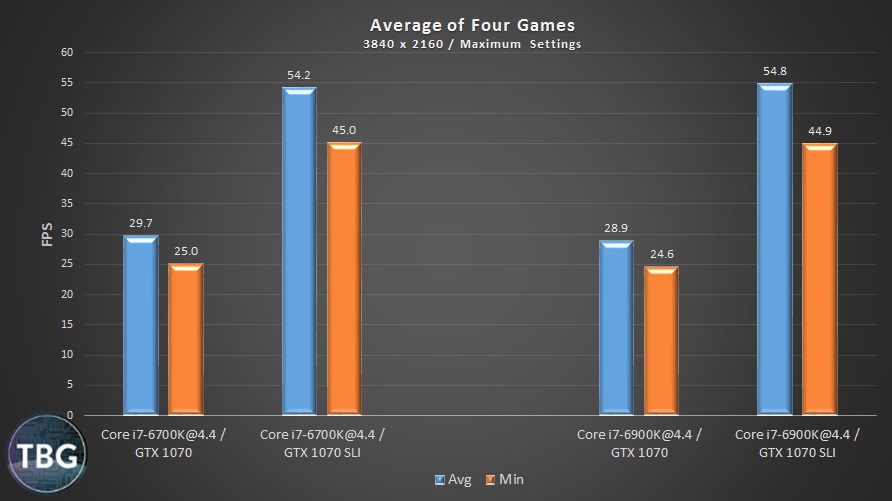

Average Results & PCIe Scaling

As shown in the benchmarks above, some games show great benefits from running in SLI on the X99 platform, while others perform equally well (or better!) running SLI on the Z170 platform. The best way we know how to sort all this out is to present an average of the results. And here, we see the two platforms ending up very, very close. But what isn’t quite as close is the scaling of SLI. X99 provides 89% scaling, while Z170 provides 80% scaling. If you’re dropping serious money on a dual-card gaming system, a few percentage points really matter, because it means you’re getting the maximum benefit from your investment.

Now, looking just at the numbers above, we might conclude the X99 platform used by the Core i7-6900K really isn’t worth it, because even with dual cards, it’s only 1% ahead. But going with Intel’s HEDT platform also means you’re going to be able to harness the power of six-, eight-, and ten-core processors, which matter a whole lot in productivity and professional apps, and can also make a difference in a handful of cutting-edge game engines. Our 3DMark Time Spy DX12 results earlier in this article provide an insight into what DX12-based games might be able to achieve on ultra-high-end systems.

Conclusion

There are two main takeaways from our latest round of 4K testing. First of all, if you’re serious about 4K gaming at maximum detail levels, which frankly isn’t necessary to enjoy a 4K resolution, you’re going to want dual cards running in SLI. We also have a GTX 1080 in house, which we’ll be featuring in a series of upcoming articles, and we can tell you that it provides a 24% boost over our GTX 1070 samples, on average. This is great, but it’s not the 80-90% boost you get going from a single 1070 to two 1070s in SLI. If you’re serious about 4K performance, you may want to go with two GTX 1070s for $900 over a single GTX 1080 at $650-$700. And note that GTX 1070 SLI is on average about 10-20% faster than the $1,200 GTX Titan X Pascal. The second takeaway, and the one that’s really central to our original hypothesis, is that the 40 PCIe lanes of the 6900K/X99 platform do indeed allow significantly better SLI scaling than the 6700K/Z170 platform and the 16 PCIe lanes it makes available for video cards.
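The value argument can be sketched as performance per dollar. The snippet below uses only the figures quoted above (a single GTX 1070 as the 1.0 baseline, a 24% boost for the GTX 1080, and 80-90% SLI scaling); the helper name and the "per $1,000" unit are our own choices for illustration.

```python
def perf_per_1000_usd(relative_perf: float, price_usd: float) -> float:
    """Relative performance (single GTX 1070 = 1.0) bought per $1,000 spent."""
    return relative_perf / price_usd * 1000.0


if __name__ == "__main__":
    options = [
        ("GTX 1080 at $650", 1.24, 650),
        ("GTX 1080 at $700", 1.24, 700),
        ("GTX 1070 SLI at $900, 80% scaling", 1.80, 900),
        ("GTX 1070 SLI at $900, 90% scaling", 1.90, 900),
    ]
    for label, perf, price in options:
        print(f"{label}: {perf_per_1000_usd(perf, price):.2f} per $1,000")
```

Even at the low end of the scaling range, the SLI pair comes out at 2.0 per $1,000, ahead of roughly 1.9 for the GTX 1080 at its lowest price. This only holds in games where SLI actually works.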

Take note that not all CPUs on the X99 platform provide the full PCIe bandwidth. The Core i7-6800K only has 28 PCIe lanes, meaning it only allows a 16x/8x configuration in SLI, so one of the two cards is going to be slightly bandwidth constrained, whereas the Core i7-6850K, Core i7-6900K, and ten-core Core i7-6950X all unlock the full 40 PCIe lanes for a 16x/16x configuration.
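The lane splits mentioned above can be sketched with a toy allocation rule. This is a simplification: it assumes each slot can only run at x16, x8, or x4, and it ignores how a particular motherboard actually wires its slots. The function and constant names are hypothetical.

```python
from itertools import combinations_with_replacement

# Assumed link widths a graphics slot can run at (a simplification).
SLOT_WIDTHS = (16, 8, 4)


def best_two_card_split(cpu_lanes_for_graphics: int) -> tuple[int, int]:
    """Pick the widest two-card split that fits within the available CPU lanes."""
    candidates = [
        (first, second)
        for first, second in combinations_with_replacement(SLOT_WIDTHS, 2)
        if first + second <= cpu_lanes_for_graphics
    ]
    if not candidates:
        raise ValueError("not enough lanes for two cards")
    # Prefer the most total lanes, then the wider second slot.
    return max(candidates, key=lambda pair: (pair[0] + pair[1], pair[1]))


if __name__ == "__main__":
    platforms = [
        ("Z170 (16 lanes for video cards)", 16),
        ("X99 with Core i7-6800K (28 lanes)", 28),
        ("X99 with Core i7-6900K (40 lanes)", 40),
    ]
    for label, lanes in platforms:
        first, second = best_two_card_split(lanes)
        print(f"{label}: x{first}/x{second}")
```

Under these assumptions the rule reproduces the x8/x8, x16/x8, and x16/x16 configurations described in this article.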

So, overall, what are our recommendations to gamers looking to run at 4K in 2016? Well, you may be a bit surprised, but we suggest allocating as much of your budget to video cards as possible. That means the following:

- Need a serious 4K gaming system? Run two GeForce GTX 1070 cards in SLI on the Z170 platform.

- Want to step up to even smoother 4K gaming? Then go for two GeForce GTX 1080 cards in SLI on the Z170 platform.

- Want the ultimate setup? Then go for two GeForce GTX 1080 cards in SLI on the X99 platform, which will unlock the full potential of an SLI system.

- Cost no object? Two Titan X Pascal cards on X99 will do the trick!

Our conclusions are based on the fact that choosing even a “low-end” Core i7-6800K CPU and X99 motherboard will cost $150-$200 more than a 6700K/Z170 setup, and also requires better cooling and a more expensive quad-channel memory configuration. We’d rather see you put that money towards the video cards if 4K gaming is your goal. But please take note: if you want an all-around, all-conquering PC, going with Intel’s HEDT is the only way to go. Despite being based on a slightly older Broadwell design, it really doesn’t lose much to its Skylake-based cousin, and of course offers the benefit of 50% to 150% more cores, which in the right apps makes a huge difference.

Now one little caveat we have to mention if we’re going to be completely thorough: SLI doesn’t always work. In games that have no SLI profile (typically lower-budget games or some brand-new big-budget games), a single GTX 1080 will beat 1070s in SLI. That makes things just a bit complicated, but all the major games we have in our test suite, including many not included in this article, work just fine in SLI. All the dire “sky is falling” warnings about SLI, which we’ve heard for years, are really out of line, in our opinion. As long as you’re willing to wait for drivers or games to be patched to enable SLI, you’ll be rewarded handsomely.

We hope you’ve learned something new from this in-depth exploration of SLI performance on Intel’s best platforms, and that our findings will inform your next PC purchase. Need help putting together a balanced gaming system that fits your budget? Then check out our PC Buyer’s Guides, updated monthly. We’ve actually made some big changes to our build recommendations based on the findings in this article.

Updates: we’ve now published two important follow-up articles, our Gaming CPU Shootout, testing the Core i5-6600K, Core i7-6700K, and Core i7-6900K, as well as our massive GTX 1070 SLI vs. GTX 1080 SLI vs. Titan X Pascal 4K Showdown! We strive to bring you answers to all the PC performance questions you won’t find anywhere else. Your use of the product links in our articles helps support this site as well as the retail purchase of all the CPUs and GPUs we utilize in our testing!