The 4K Gaming Showdown: GeForce GTX 1070 SLI vs 1080 SLI vs Titan X Pascal

Introduction

Ever since Nvidia launched its SLI technology in 2004, enthusiasts have debated whether the extra performance it promises makes up for its potential flaws, and indeed whether it actually improves the gaming experience at all. We’ve examined SLI performance a number of times, including most recently an analysis of SLI scaling using dual GeForce GTX 1080 cards and a high-bandwidth SLI bridge. In each of these cases, we found that SLI most definitely boosted performance, providing “next-gen” performance with current-gen hardware.

One factor that has often weighed in favor of investing in SLI is that it’s typically taken up to a year for the fully-realized version of Nvidia’s current architecture to materialize. For example, the original Titan debuted in February 2013, but was based on the Kepler architecture first seen in May 2012. Likewise, the Maxwell-based Titan X debuted in March of 2015, seven months after Maxwell made its first appearance in the GTX 980. But things changed a bit this year, as Nvidia launched an ultra-high-end card in the form of the all-conquering Titan X Pascal just two months after its GTX 1070 and 1080 arrived. Nvidia probably could have stuck to a schedule similar to the ones it had used before and still kept enthusiasts happy, but instead decided to take advantage of early success with the Pascal design by pushing out its fully-spec’d Titan X Pascal right away. Of course, Nvidia was going to make folks pay for the privilege of the extra performance on tap, releasing the Titan X Pascal at a record-breaking $1,200, the most ever for a single-GPU card. That made the new Titan more expensive than any other gaming configuration on the market, save for dual GTX 1080 cards in SLI, and even then, the cost was similar. Our curiosity was piqued: could the new Titan actually render SLI’d cards of the same generation obsolete, or was it just an overpriced cash grab? Well, you’re about to find out!

As we’ve done with every previous processor and video card benchmarking article published on this site, we purchased all the GPU hardware for this article at retail in order to eliminate any potential conflict of interest. Furthermore, because we know full well what it feels like to pay for each product, we won’t be tempted to dismiss cost as a “theoretical” impediment to consumers. It’s a real issue, and gamers looking to maximize performance on a fixed budget must always consider price. In fact, several of the video cards we tested were purchased specifically for this article. If you’d like to support this approach to component testing, please use the product links in any of our articles to make your next tech purchase!

Test Setup

Here are the specs (and a photo) of the system we used for benchmarking:

- CPU: Intel Core i7-6900K, overclocked to 4.3GHz

- Motherboard: Asus X99-Pro/USB3.1

- RAM: Corsair 4x8GB Vengeance LPX DDR4-3200

- SSD #1: Samsung 950 Pro M.2 512GB

- SSD #2: Samsung 850 Evo 1TB

- Case: SilverStone Primera PM01

- Power Supply: EVGA Supernova 1000 PS

- CPU Cooler: Corsair Hydro H100i v2

- Operating System: Windows 10

- Monitor: LG 27UD68-P 27-Inch 4K

And here are the video card combinations we tested:

- EVGA GeForce GTX 1070 SC 8GB

- Asus GeForce GTX 1070 8GB FE and EVGA GeForce GTX 1070 SC 8GB with EVGA PRO SLI Bridge HB

- EVGA GeForce GTX 1080 SC 8GB

- Dual EVGA GeForce GTX 1080 SC 8GB with EVGA PRO SLI Bridge HB

- Nvidia Titan X Pascal 12GB

Note that while three of our cards were factory-overclocked, all cards were set to run at reference speeds, making this a true apples-to-apples comparison. Any card can be overclocked, and as we’ve found in our testing, Pascal-based GPUs all tend to have around the same amount of OC headroom: 10-15%.

To eliminate system bottlenecks as much as possible, we used the EVGA PRO SLI Bridge HB, which we found in our most recent in-depth look at SLI scaling provides significantly better performance than the older single-link SLI bridge. Furthermore, we used our X99-based benchmarking system, which we determined can increase SLI scaling by as much as 10%, thanks to its greater number of PCIe lanes. Finally, our eight-core Intel Core i7-6900K processor was overclocked to 4.3GHz, the stability limit for most Broadwell-E chips, and our quad-channel RAM ran at DDR4-3200, providing tremendous memory bandwidth.

For our testing, we’re using one synthetic benchmark and eight games, all running at a native 4K resolution: 3DMark Fire Strike Ultra, Crysis 3, Far Cry 4, The Witcher 3, Fallout 4, Rise of the Tomb Raider, DOOM, Battlefield 1, and Watch_Dogs 2. Each game was run with the highest preset available, typically referred to as “Very High” or “Ultra.” Note that in every game other than Battlefield 1, this is actually not the highest setting available, as individual parameters may have additional quality settings beyond Ultra. For example, DOOM has a few “Nightmare” settings, and a number of games have extra ambient occlusion settings that aren’t included in any preset. For the sake of making comparisons easy, we decided it wasn’t worth it to max out each individual quality setting. Furthermore, in many cases, doing so would make the games unplayable at 4K.

All game data was collected in actual in-game runs, which often provide totally different (and obviously more relevant) results than canned benchmarks. We used FRAPS to collect data for three 30-second samples of each benchmark on each video card setup, translating to a total of 120 benchmark runs for this article. Trust us when we say that a few were excruciating due to low framerates, particularly our live multiplayer Battlefield 1 runs on a single GTX 1070. Surviving for 30 seconds straight at under 50fps was no mean feat!
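
The run count above can be checked with a little arithmetic. The sketch below assumes the 120 runs cover the eight games on the five card setups at three samples each (the 3DMark runs are left out, which is our assumption), and that each setup’s reported figure is the plain mean of its three samples. The function names and the sample values are illustrative only.

```python
from statistics import mean

GAMES = [
    "Crysis 3", "Far Cry 4", "The Witcher 3", "Fallout 4",
    "Rise of the Tomb Raider", "DOOM", "Battlefield 1", "Watch_Dogs 2",
]
SETUPS = [
    "GTX 1070", "GTX 1070 SLI", "GTX 1080", "GTX 1080 SLI", "Titan X Pascal",
]
SAMPLES_PER_TEST = 3  # three 30-second samples per game per setup


def total_runs(games, setups, samples_per_test):
    """Number of individual benchmark runs across the whole test matrix."""
    return len(games) * len(setups) * samples_per_test


def average_fps(samples):
    """Mean of the per-sample average frame rates for one game on one setup."""
    return mean(samples)


print(total_runs(GAMES, SETUPS, SAMPLES_PER_TEST))  # 120

# Hypothetical sample values, not measurements from this article.
print(average_fps([44.0, 45.0, 46.0]))
```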

OK, now that we’ve explained the method to our madness, it’s time to move on to the results!

Benchmarks

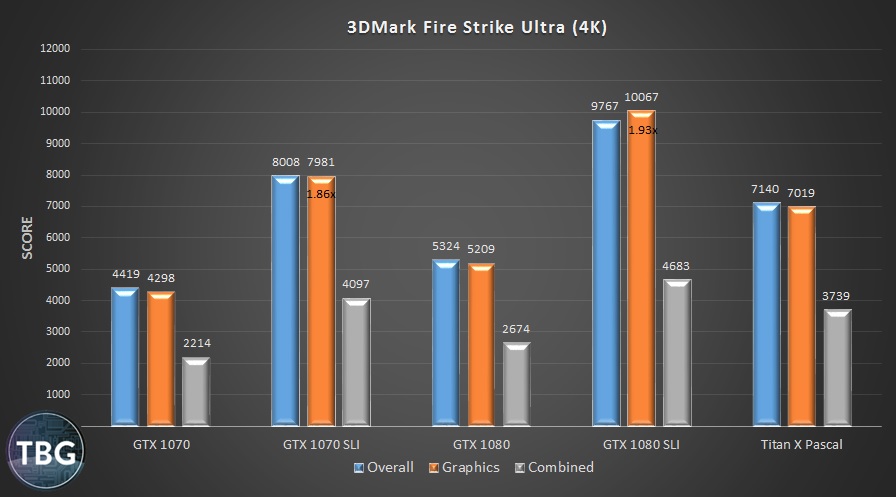

3DMark Fire Strike Ultra

We start with 3DMark as always to provide a baseline for our analysis. Fire Strike Ultra runs natively in 4K, and is quite challenging for any modern system. We’re reporting the Overall, Graphics, and Combined Scores here, dropping the Physics Score, as it’s determined only by the platform, which remained a constant throughout our testing. We’ve provided a scaling number with the Graphics Score for our two SLI systems, as we’ll be doing in all of our other tables in this article. As you can see, GTX 1070 cards in SLI scale at 86%, which is quite good, but the GTX 1080 in SLI hits 93% scaling, which is simply phenomenal. These results put 1070 SLI 14% ahead of the Titan X Pascal, while the 1080 SLI setup is 43% ahead. We’ll soon see if that kind of advantage holds!
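
For readers who want to reproduce the scaling and “percent ahead” figures from their own numbers, here is a minimal sketch. It assumes scaling is defined as the percentage gained by adding the second card, so that 100% would mean a perfect doubling; the function names and the example frame rates are hypothetical and are not taken from our tables.

```python
def sli_scaling(single_fps: float, sli_fps: float) -> float:
    """Percent gained by adding a second card; 100 means perfect doubling."""
    return (sli_fps - single_fps) / single_fps * 100.0


def lead_over(candidate_fps: float, baseline_fps: float) -> float:
    """Percent by which the candidate leads the baseline (negative if it trails)."""
    return (candidate_fps - baseline_fps) / baseline_fps * 100.0


# Hypothetical frame rates for illustration only, not measured results.
single_card, sli_pair, flagship = 50.0, 93.0, 80.0

print(f"SLI scaling: {sli_scaling(single_card, sli_pair):.0f}%")
print(f"Lead over single flagship card: {lead_over(sli_pair, flagship):.0f}%")
```

Under this definition, any result above 100% means the SLI pair more than doubled the single-card frame rate.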

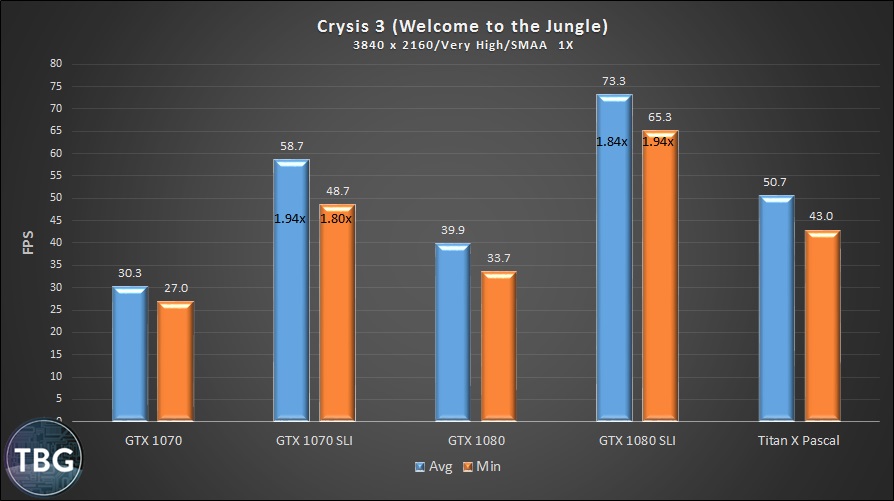

Crysis 3

Crysis is as close to a universal PC benchmark as we’ve ever seen in an actual PC game. That’s why we keep it around, even though it was released way back in 2013. The fact that it can still bring systems to their knees is a testament to the skill of Crytek engineers, who were able to provide a view of the future of PC gaming with their advanced game engine. A shame, then, that it looks as if Crytek may be at the end of its rope, with rumors swirling regarding its imminent demise…

In any event, yes, all of our systems can play Crysis in 4K, although the GTX 1070 suffers badly, and it really takes a single GTX 1080 to make this game feel decent. Luckily, SLI scaling was excellent in this well-tuned game, and our SLI setups had no trouble delivering fantastic performance, with the 1070 SLI system 16% ahead of the Titan X Pascal, and the 1080 SLI system 45% ahead. Note that we used minimal anti-aliasing in these benchmarks for two reasons: first, at 4K, it’s really not all that helpful to have extreme anti-aliasing, since there’s little aliasing to begin with, and second, adding a whole lot more AA makes the game unplayable on all of our setups.

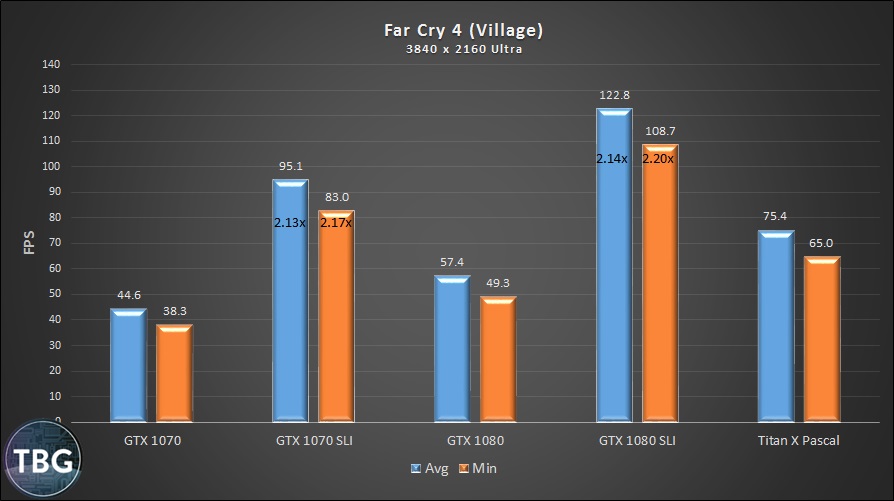

Far Cry 4

Eagle-eyed readers will probably scratch their heads at the results in Far Cry 4, thinking we’ve made some kind of mistake. Indeed, SLI scaling above 100% doesn’t sound possible, but we’ve tested Far Cry 4 in SLI numerous times and always found such “impossible” scaling to occur. There are two potential explanations: SLI eliminates a particular bottleneck that holds back single card performance, or else some graphical setting isn’t actually being applied in SLI. We’ll leave it to you to decide which is more likely, but don’t expect this kind of scaling in any other game! The GTX 1080 SLI system is an absurd 63% faster than the Titan here.

Next we’ll move on to a number of more recent games, all of which pushed the limits of PC performance while also advancing the state of PC gaming generally.

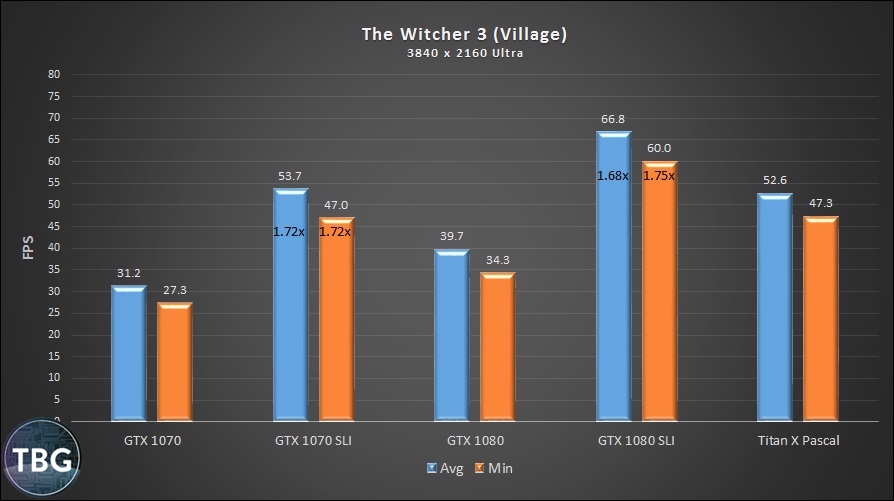

The Witcher 3

While a single GTX 1070 is utterly overwhelmed in this game, decent scaling allows the GTX 1070 SLI to break even with the much more expensive Titan X Pascal. And while a single GTX 1080 provides passable performance, two 1080 cards in SLI are fantastic, making this gorgeous game really shine. This setup is 27% ahead of the Titan.

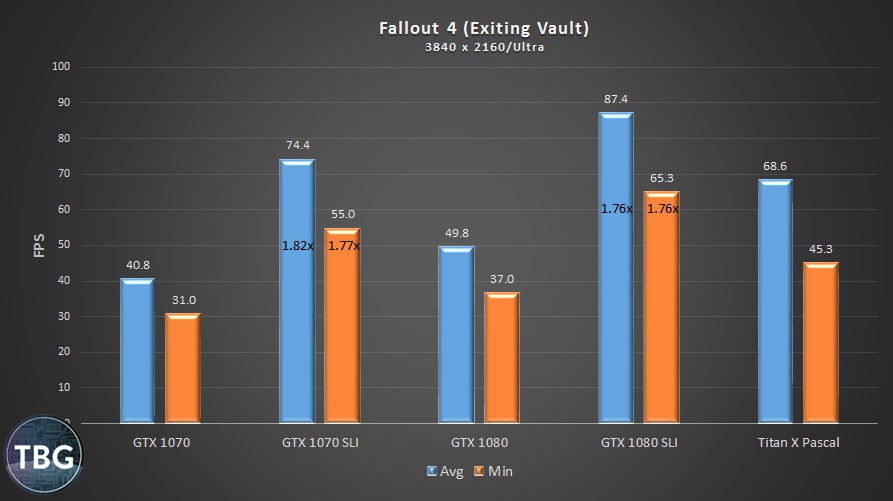

Fallout 4

This game requires tweaks to the Fallout4Prefs.ini file to push past the 60fps frame cap, and tweak we did, because our ultra-high-end solutions were all well above that limit. And SLI scaled quite well, allowing the GTX 1070 in SLI to jump 8% ahead of the Titan X Pascal, while the GTX 1080 SLI setup was 27% ahead. In reality, though, it’s the Titan that is most impressive here, offering 38% more performance than a single GTX 1080, a bigger advantage than it had in any other game in our benchmark suite.

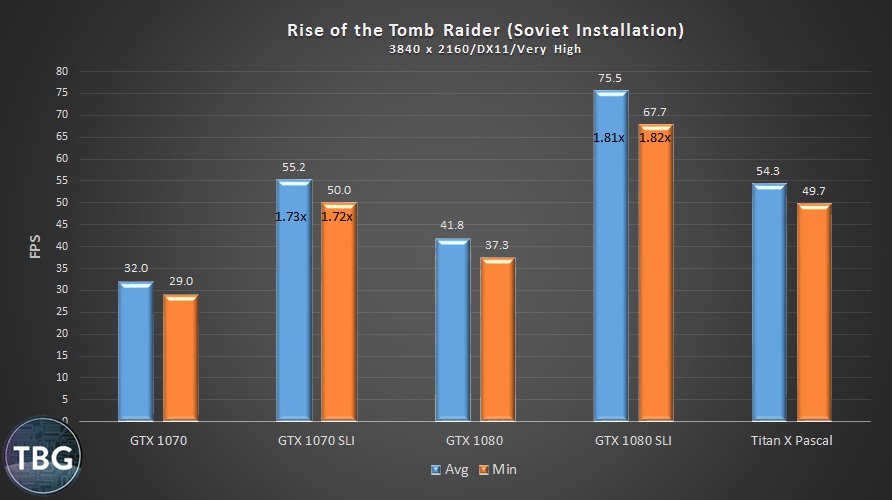

Rise of the Tomb Raider

With scaling just over 70%, our GTX 1070 SLI setup is able to land slightly ahead of the Titan X Pascal, while the GTX 1080 SLI setup is 39% ahead of its similarly priced cousin. That being said, the Titan is completely playable in this game at 4K, although judicious use of overclocking would help to eliminate tearing by bringing the game closer to 60fps and a V-Sync lock. Of course, that comes with its fair share of noise, taking away from the sublime experience that this game can provide.

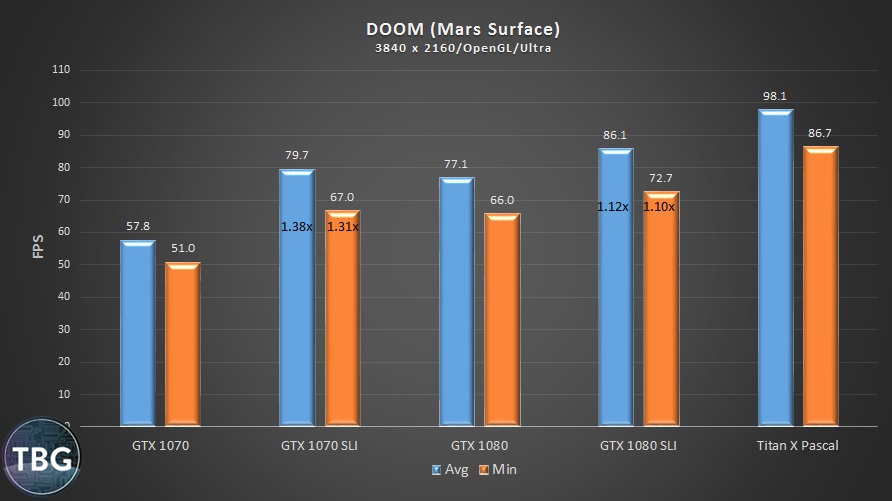

DOOM

Oh, goodness, the downside of SLI is painfully obvious here. Sometimes it just doesn’t scale well at all. And take note: we chose to bench with DOOM’s OpenGL API, rather than the more advanced Vulkan API, because SLI doesn’t work at all in Vulkan. Sadly, that means SLI users will actually have a slightly better experience chugging along with poor scaling and an outdated API than they will using the cutting-edge Vulkan API. We tested this and can confirm that for the GTX 1070 SLI setup in particular, OpenGL is the way to go, despite its inefficiency in harnessing cores (it actually doesn’t even work correctly on our eight-core processor, providing lower performance than it does when we disable four of the CPU’s cores!).

Frankly, if DOOM is your game, and you’re considering upgrading to SLI to improve your performance, we recommend that you just don’t do it!

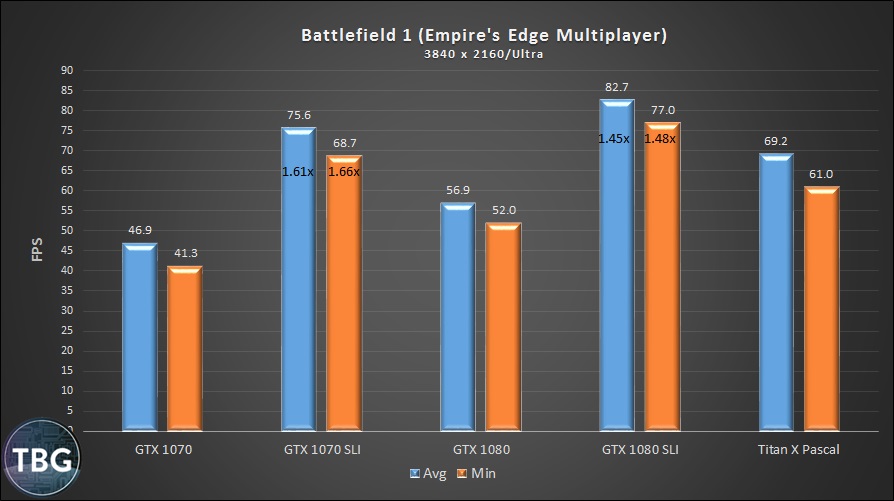

Battlefield 1

Competitive gamers take note: these benchmarks were conducted on the most graphically-intense multi-player map in Battlefield 1, and represent the actual, real-world experience of playing the game, unlike the single-player benchmarks you’ll see everywhere else. And that means that collecting 4K benchmarks on our single GTX 1070 was an exercise in frustration, as the game simply does not play well at 45fps. Luckily, despite less-than-stellar scaling, SLI is able to save the day, meaning that 1070 SLI provides great framerates, besting the Titan X Pascal by about 10%. Not surprisingly, the GTX 1080 SLI setup is even further ahead, providing the best overall experience. That’s despite the fact that, as in DOOM, GTX 1080 SLI scaling is quite a bit worse than GTX 1070 SLI scaling. These games probably take advantage of certain assets of the 1070 GPU that don’t change when moving to the GTX 1080, most likely the 64 ROPs that both GPUs have in common.

Now, here’s the sad thing about BF1: after a successful release with regards to SLI in October 2016, things went downhill fast, as a game patch released in November essentially broke SLI, causing intense flickering in menus and sometimes in the game as well. Thankfully, DICE issued a new patch on December 13, 2016, right in the middle of our testing, which fixed SLI, allowing us to include the game in our benchmarks. The only problem: overall performance with this patch is far lower than it was back when the game was released. On the exact same map, with the exact same test system, our GTX 1080, 1080 SLI, and Titan cards benchmarked 15-20% higher upon release than they did in the table above. All we can say is we hope DICE is able to sort things out in future updates so that both performance and game quality are maximized.

One other note about Battlefield 1: it’s intensely multi-threaded, and Hyperthreading and more cores make a world of difference in this game. We had both working in our favor with our Core i7-6900K benchmarking system. Don’t expect to have the same experience on a Core i5-6600K or similar class of processor. Serious GPUs demand a serious CPU in this game, and the Core i7-6700K is the minimum we recommend.

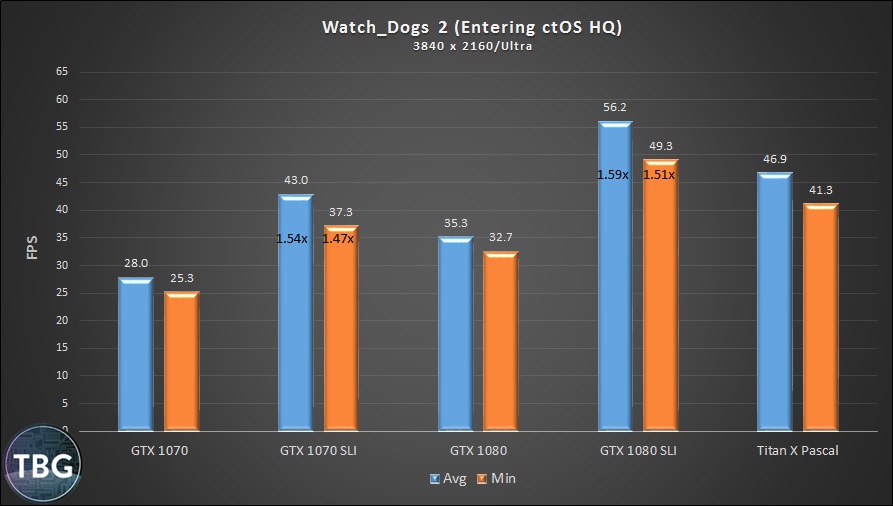

Watch_Dogs 2

Released just weeks before we went to press, Watch_Dogs 2 not surprisingly offers pretty poor SLI scaling, a common trait with newly-released games, even those sponsored by Nvidia, like this one. It’s also an absolute bear to run, bringing even the Titan X Pascal well under 50fps, the only game in our suite to do so. Some of this may be due to a lack of optimization, but there’s no doubt that the graphics in Watch_Dogs 2 are pretty impressive. Note that we tested with the optional high-resolution texture pack installed.

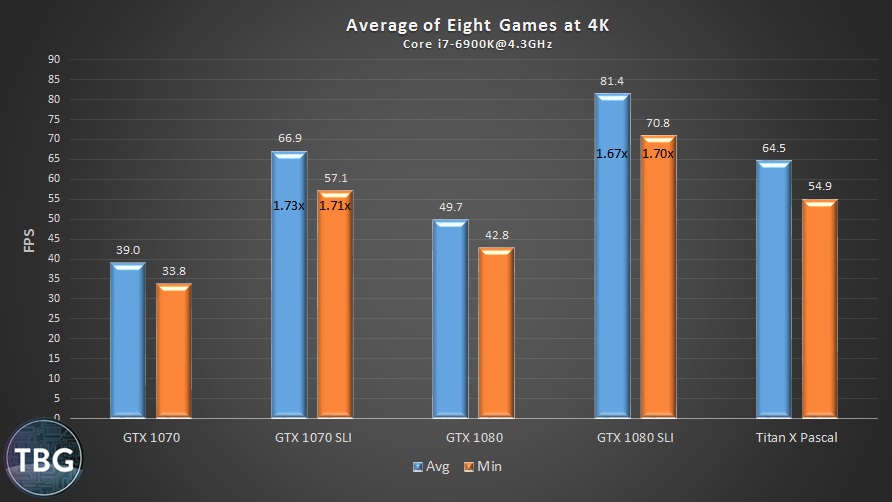

Average Results

As shown in the benchmarks above, some games run great on SLI, and others do not. But if we only picked games that scaled magnificently with SLI or only picked games with terrible scaling, we wouldn’t be painting an honest picture. Instead we picked eight games that we think represent the best of PC gaming, several of them winners of 2015 Game of the Year honors, and several more up for 2016 Game of the Year honors. And while some do far better than others when using SLI, unless you only play one or two specific games, the best way to judge SLI is by looking at the average across this benchmark suite. As can be seen below, on average, SLI actually does pretty well for itself.

Providing average scaling of around 70%, SLI is able to handily defeat the Titan X Pascal whether judging it based on price or performance. With the GTX 1070 SLI setup, you’re paying $800-$900 for performance equivalent to the $1,200 Titan X Pascal. Likewise, with the GTX 1080 SLI setup, you’re paying around $1,300 for a solution that’s 26% faster than the Titan on average, with only a single game in which it loses (DOOM). Now, please keep in mind that we utilized a benchmarking system that maximized SLI’s chances of success, featuring not only dual PCIe 3.0 x16 slots (courtesy of its X99-based motherboard), but also an aftermarket high-bandwidth SLI bridge. You really need to factor in the cost of these components before drawing firm conclusions regarding the performance per dollar SLI provides. As we’ve shown in previous articles, both features boost performance by about 5-7%. If we had tested with neither feature, the 1070 SLI setup would most definitely have fallen behind the Titan X Pascal, and the 1080 SLI setup would lose some of its luster as well.
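
To put the price comparison in one place, the rough sketch below computes dollars per unit of Titan-equivalent performance from the figures quoted in this section. It takes $850 as the midpoint of the $800-$900 range for GTX 1070 SLI (our assumption), treats 1070 SLI as exactly equal to the Titan, and leaves out the cost of the SLI bridge and the X99 platform, so it flatters SLI somewhat. The function name is illustrative.

```python
def cost_per_performance(price_usd: float, relative_performance: float) -> float:
    """Dollars paid per unit of performance, where the Titan X Pascal = 1.00."""
    return price_usd / relative_performance


# (price in USD, average performance relative to the Titan X Pascal)
setups = {
    "GTX 1070 SLI": (850.0, 1.00),    # midpoint of $800-$900; parity assumed
    "GTX 1080 SLI": (1300.0, 1.26),   # about 26% faster on average
    "Titan X Pascal": (1200.0, 1.00),
}

# Print the setups from best to worst value.
for name, (price, perf) in sorted(
    setups.items(), key=lambda item: cost_per_performance(*item[1])
):
    print(f"{name}: ${cost_per_performance(price, perf):,.0f} per unit of performance")
```

Adding the bridge and any platform premium to the SLI prices narrows the gap, which is why those costs belong in the calculation before drawing firm conclusions.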

Additionally, as discussed above, there’s more to SLI than averages. In some of our games, SLI scales poorly from the start, with proper profiles added only much later (The Witcher 3 comes to mind), breaks with subsequent game patches (Battlefield 1), or just doesn’t work at all (as in DOOM using the Vulkan API). The Titan X Pascal delivers its absolute best in every game on day one, providing at least some justification for its premium price.

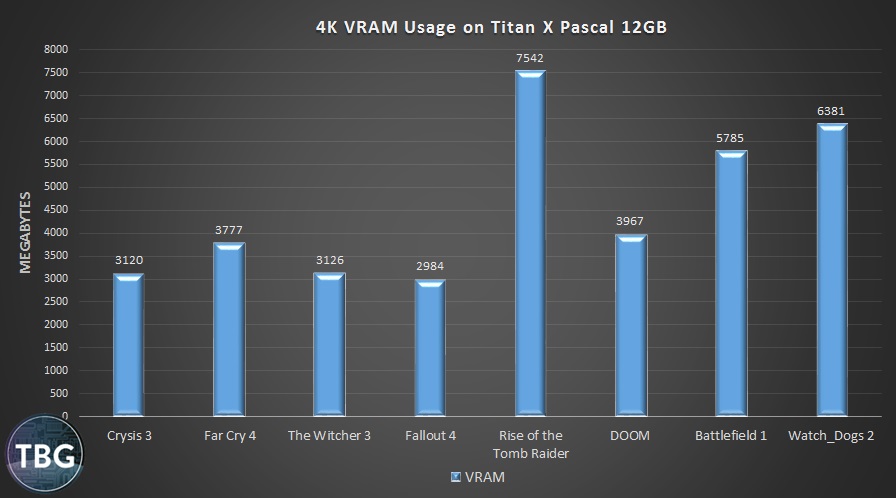

VRAM Usage

While it’s gone unmentioned thus far, the Titan X Pascal has one major advantage over running two lesser cards in SLI: 12GB of VRAM vs. 8GB. But does this spec actually matter? In the graph above, we’ve arranged the games in the same order that we presented them in the article, which is chronological by release date. As is clear, VRAM usage stayed fairly consistent during the period of time when 4GB video cards were the norm (2013-2015), but has gone up dramatically in 2016, when 8GB cards entered the mainstream (primarily in the form of the GTX 1070 and the much less expensive AMD Radeon RX 480 8GB). Ultimately, though, we think the massive increase in VRAM usage might have resulted from a different market altogether, specifically the gaming console market, where both the Xbox One and PlayStation 4 have the equivalent of 8GB of VRAM. With most games being developed for consoles first, we expect that this pattern won’t be reversing any time soon.

By the way, we also checked VRAM usage in a number of games on the GTX 1070 SLI setup, and found that on average it used 10-20% less VRAM than the Titan X Pascal. This occurred despite the fact that the GTX 1070 has 8GB of VRAM, which would be able to support the very same usage we saw on the Titan X Pascal, as all games stayed under 8GB. This pattern suggests that there may be a bit of optional buffering going on that isn’t necessarily critical to performance, although it’s impossible to know exactly what’s going on behind the scenes. Suffice it to say, we think 8GB is probably the minimum amount you’d want on a high-end card today, and it’s clearly the area where cards become obsolete the fastest. Short-changing the VRAM allocation on high-end cards can be a big problem in terms of future-proofing, and we have a feeling Nvidia has learned its lesson here (with 2013’s GeForce GTX 780 Ti 3GB being the most obvious example of a life cut short by insufficient VRAM).

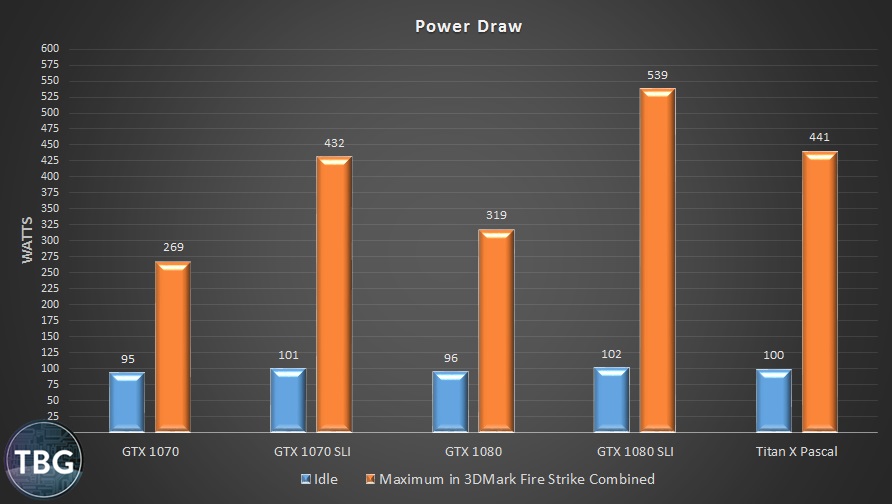

Power Use

These results really surprised us. In the past, going SLI meant sucking down huge amounts of power, far more than you would with a single card, in turn giving up on a whole lot of efficiency. And yet today, the mid-range Pascal architecture embodied by the GTX 1070 is so amazingly efficient that teaming up two of these cards together uses less power than a single Titan X Pascal, despite offering more performance on average.

While it might be tempting to place the blame on the Titan for being inefficient, that’s really not a fair characterization. Compared to a single GTX 1080, the Titan is 30% faster in our benchmarks and uses 38% more power. This power increase is reasonable, in our opinion. What isn’t really reasonable, however, is the noise the Titan’s cooler makes in attempting to exhaust all of its waste heat. We know why Nvidia continues to equip its reference cards with these coolers: they are guaranteed to work, even in poorly-designed OEM systems. But the fact that the Titan X Pascal is only available in reference form, the first time any Nvidia card has been restricted in this way, is not a step in the right direction. Indeed, in addition to being faster, more efficient, and far cheaper than the Titan, our GTX 1070 SLI duo was quieter as well. That’s win-win-win-win for the 1070, and a whole lot of losing for the Titan. It’s a prestige part, and Nvidia no doubt sold many of them, all direct from the Nvidia store, generating huge profits. It’s an impressive piece of hardware, but it’s not flawless, and Nvidia’s bravado casts a pall over what could have been a knock-out success.
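
The efficiency comparison between the Titan and a single GTX 1080 boils down to one division. This minimal sketch uses only the two ratios quoted above (30% faster, 38% more power); the function name is illustrative, and it assumes both percentages are averages over the same workloads.

```python
def relative_efficiency(performance_ratio: float, power_ratio: float) -> float:
    """Performance per watt relative to the baseline card (baseline = 1.0)."""
    return performance_ratio / power_ratio


# Titan X Pascal versus a single GTX 1080: 30% faster, 38% more power.
titan_vs_1080 = relative_efficiency(1.30, 1.38)

print(f"Relative performance per watt: {titan_vs_1080:.3f}")  # 0.942
print(f"Efficiency change: {(titan_vs_1080 - 1.0) * 100:.1f}%")
```

In other words, the Titan gives up roughly 6% in performance per watt against the GTX 1080, which is consistent with calling the power increase reasonable.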

As long as we’re discussing cooling and noise, we might as well mention something that many enthusiasts probably don’t want to hear: running matching open-air cards together in SLI simply isn’t ideal. Our GTX 1070 SLI system used an unmatched pair of cards, the upper card open-air and the lower card blower-style, and the cards ran at nearly the same temperature throughout our testing (averaging around 70-74 °C). Our GTX 1080 SLI system, on the other hand, featured matched EVGA GeForce GTX 1080 SC cards utilizing the ACX 3.0 open-air cooler. The upper card in this tandem routinely ran 10-12 °C hotter than the lower card, meaning it would occasionally bounce off the 83 °C thermal-limiting threshold. This also caused the upper card to run its fans much louder than the lower card.

While not quite as aesthetically appealing, and requiring some minor tweaking to run at equal clocks, unmatched cards are probably a better overall solution for enthusiasts really into optimizing performance metrics. The best solution, of course, is dual liquid-cooled EVGA GeForce GTX 1070 Hybrid or GeForce GTX 1080 Hybrid cards, but this type of setup comes with its own drawbacks: a significant price premium, a penalty in terms of ease of installation, and higher idle noise. In the end, SLI users are always going to have to make a few trade-offs… there’s no two ways about it!

Conclusion

Well, anyone looking for us to pick an absolute winner here is going to be disappointed; both SLI and the Titan X Pascal have their place in the pantheon of PC gaming, and Nvidia should be commended for offering enthusiasts multiple options when it comes to achieving extreme performance. The one setup that truly stands out is dual GTX 1070 cards in SLI, based on performance, price, and efficiency.

On the other hand, as great as it is, we can’t help but think that consumers aren’t quite getting their money’s worth with the Titan X Pascal. Not only does it command a premium price, but it’s also equipped with a cooler that isn’t quite ideal for its power and heat output. In the past, Titan cards were at least available with liquid cooling, which made them very appealing. In refusing to allow board partners access to the Titan X Pascal and burdening it with a cooler best-suited to cards selling for half its price, Nvidia hasn’t done consumers any favors. That being said, the card is a technological tour de force, and by putting it in the hands of gamers months ahead of schedule, Nvidia truly advanced the state of PC gaming.

The same can be said of Nvidia’s efforts to further refine its implementation of SLI, with the new high-bandwidth SLI connector providing significant, measurable benefits to performance. While the $40 cost may seem excessive, there’s no other component that provides so much return on investment in the PC gaming world. Want 10% more gaming performance from your CPU, RAM, or even your GPU? Good luck getting that for $40, at least at the high end! And given the boost provided by high-bandwidth SLI, dual GTX 1080 cards end up with a decisive victory in our showdown, offering far more performance than a Titan X Pascal can deliver, despite a very similar price.

All told, both Titan X Pascal and modern-day SLI make playable 4K framerates the rule rather than the exception. But alas, TBG’s motto is “bringing tech to light,” and sometimes that means shining a harsh light on chasing the limits of technology for technology’s sake. As we’ve shown, you can certainly game at 4K with SLI or the Titan X Pascal, and enthusiasts no doubt consider 4K benchmarks to be the ultimate test of a modern gaming PC’s mettle. The problem inherent in this approach is that 4K gaming may in fact not be the be-all and end-all of modern PC gaming. That’s because 4K monitors are currently limited to 60Hz, and throughout our testing, it was painfully obvious that our overall gaming experience on our 27″ 4K monitor wasn’t nearly as good as it is on the Acer 27″ 1440p 144Hz G-Sync monitor we typically test with. Yes, the added resolution is nice when you’re sitting still admiring the scenery, but once the action starts up, the tearing, lag, and just plain sluggishness of a 60Hz monitor rear their ugly heads.

And so, we end our 4K Gaming Showdown with the most unexpected of conclusions: we strongly recommend gamers choose a lower-resolution, high-refresh-rate monitor, preferably with G-Sync, over any 4K 60Hz monitor, even G-Sync models. Yes, we know many of our readers have purchased and enjoyed using 4K monitors for gaming, but as with cameras, it’s about more than megapixels. We believe true gaming bliss is gaming at 100-120fps with a GTX 1070 SLI, GTX 1080 SLI, or Titan X Pascal system, a target they can all easily achieve in just about every game using 2560 x 1440 monitors like the Asus PG279Q ROG Swift, and even ultra-wide 3440 x 1440 monitors like the Acer X34 Predator. In other words, we still think these muscle-bound GPU setups are great to have… we’re just not sold on 4K monitors, yet. When DisplayPort 1.4 finally makes its way to monitors and video cards, opening up the possibility of 4K/120Hz gaming, we’ll be first in line to make the leap, and by then we’ll certainly have the GPU power to enjoy it!
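
For the curious, here is a back-of-the-envelope sketch of why 4K at 120Hz has to wait for a faster display link. It assumes 24 bits per pixel and ignores blanking intervals, so real requirements are somewhat higher than shown. The link figures are the commonly published effective four-lane data rates for DisplayPort HBR2 and HBR3 after 8b/10b encoding; the function and dictionary names are ours.

```python
def raw_video_gbps(width: int, height: int, refresh_hz: int,
                   bits_per_pixel: int = 24) -> float:
    """Uncompressed pixel data rate in Gbit/s, ignoring blanking intervals."""
    return width * height * refresh_hz * bits_per_pixel / 1e9


# Effective four-lane payload rates in Gbit/s after 8b/10b encoding.
DP_EFFECTIVE_GBPS = {
    "DisplayPort 1.2 (HBR2)": 17.28,
    "DisplayPort 1.4 (HBR3)": 25.92,
}

for refresh in (60, 120):
    needed = raw_video_gbps(3840, 2160, refresh)
    for link, capacity in DP_EFFECTIVE_GBPS.items():
        verdict = "fits" if needed <= capacity else "does not fit"
        print(f"4K at {refresh}Hz needs about {needed:.1f} Gbit/s: "
              f"{verdict} within {link}")
```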

For our latest monitor recommendations, check out our Monitor Buyer’s Guide, updated quarterly. And as always, you can see all of our current PC component recommendations in our Do-It-Yourself PC Buyer’s Guides, which help gamers squeeze every last bit of performance out of their systems, regardless of budget!