The Quad vs. Hex Showdown: Core i7-4790K vs. 5820K in Games

The effect of core count on games is a question we have looked at many times before in our Gamer’s Bench, but so far with a focus on dual-cores, quad-cores, and Hyperthreaded virtual cores. We’ve previously found that quad-cores offer significant advantages over dual-cores, and that Hyperthreading can help in certain games, especially on dual-core processors. These results raised an obvious question: what if you have more than four cores, along with Hyperthreading? Would that be the ultimate gaming setup? Well, we’re here to answer that question, as we pit our six-core i7-5820K processor against a quad-core i7-4790K.

It’s an interesting matchup in more than one way. Both chips are based on the Haswell microarchitecture (the 5820K is technically “Haswell-E”), meaning their individual cores are essentially identical. The only major difference between the two designs is that the 5820K has 2.5MB of cache per core, while the 4790K has 2MB of cache per core. Both feature Hyperthreading, which brings the 5820K up to 12 logical cores and the 4790K up to 8. That said, the 4790K is clocked at 4.0GHz out of the box, with Turbo Boost to 4.4GHz, while the 5820K is clocked at 3.3GHz with Turbo Boost to 3.6GHz. To even the playing field, we overclocked both processors to 4.4GHz for our benchmark tests.
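For readers who like to see the arithmetic, here is a minimal Python sketch that derives the totals implied by the per-core figures above. The dictionary layout and the `summarize` helper are our own illustrative names, and the overclock percentage is measured against each chip's stock base clock.

```python
# Figures quoted in the paragraph above; the data layout is illustrative only.
chips = {
    "i7-4790K": {"cores": 4, "cache_mb_per_core": 2.0, "base_ghz": 4.0},
    "i7-5820K": {"cores": 6, "cache_mb_per_core": 2.5, "base_ghz": 3.3},
}
TEST_CLOCK_GHZ = 4.4  # both chips were overclocked to this speed


def summarize(spec, test_clock_ghz=TEST_CLOCK_GHZ):
    """Derive totals and overclock size from the per-core figures."""
    return {
        # Hyperthreading exposes two logical cores per physical core
        "logical_cores": spec["cores"] * 2,
        "total_cache_mb": spec["cores"] * spec["cache_mb_per_core"],
        # How far above the stock base clock the test clock sits
        "overclock_pct": round((test_clock_ghz / spec["base_ghz"] - 1) * 100, 1),
    }


summary = {name: summarize(spec) for name, spec in chips.items()}
for name, row in summary.items():
    print(name, row)
```

The takeaway is that matching clocks required a much larger overclock on the 5820K (roughly a third above its base clock) than on the 4790K (a tenth above its base clock).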

Of course, there’s one other major difference between the two processors, and that is the platform. The 4790K uses the Z97 platform, while the 5820K uses the X99 platform, the latter of which features a more robust quad-channel DDR4-based memory architecture. One would think that the added memory bandwidth, in combination with the extra cache, should be enough to push the 5820K ahead of the 4790K in all gaming scenarios, even where extra cores aren’t helpful. We shall soon see if that proves to be true!

Test Setup

We performed our quad-core benchmarks on the following test system:

- CPU: Intel Core i7-4790K (overclocked to 4.4GHz)

- Motherboard: ASRock Z97 Extreme 4

- Video Card #1: Sapphire Radeon R9 290 4GB (representing mid-range)

- Video Card #2: EVGA GeForce GTX 980 Ti 6GB (representing high-end)

- Memory: 4x4GB G.Skill DDR3-2400 @ 2133MHz

- Solid-State Drive: Crucial MX100 512GB

- Power Supply: EVGA Supernova G2 850W

- Case: NZXT S340

- CPU Cooler: Noctua NH-U12S

- Operating System: Windows 10

Our hex-core test system consisted of the following components:

- CPU: Intel Core i7-5820K (overclocked to 4.4GHz)

- Motherboard: Asus X99-Pro/USB3.1

- Video Card #1: Sapphire Radeon R9 290 4GB (representing mid-range)

- Video Card #2: EVGA GeForce GTX 980 Ti 6GB (representing high-end)

- RAM: G.Skill 4x4GB Ripjaws4 DDR4-3000 @ 2666MHz

- SSD #1: Samsung SM951 M.2 256GB

- SSD #2: 2x Samsung 850 Evo 500GB in RAID0

- Power Supply: EVGA Supernova 1000 PS

- Case: Corsair Carbide 500R

- CPU Cooler: Corsair Hydro H100i

- Operating System: Windows 10
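To put the memory difference between the two test systems in perspective, here is a rough sketch of theoretical peak bandwidth, using the memory speeds listed above. It assumes the standard 64-bit width per memory channel and that the Z97 system runs in dual-channel mode (the platform's usual configuration, which we did not list explicitly). The function name is ours, and real-world throughput will be lower than these peaks.

```python
def peak_bandwidth_gbs(transfer_rate_mts, channels, bus_width_bits=64):
    """Theoretical peak in GB/s: transfers per second x bytes per transfer x channels."""
    bytes_per_transfer = bus_width_bits // 8
    return transfer_rate_mts * 1e6 * bytes_per_transfer * channels / 1e9


# Z97 system: DDR3 running at 2133MHz, assumed dual-channel
z97 = peak_bandwidth_gbs(2133, channels=2)
# X99 system: DDR4 running at 2666MHz, quad-channel
x99 = peak_bandwidth_gbs(2666, channels=4)

print(f"Z97: {z97:.1f} GB/s, X99: {x99:.1f} GB/s, ratio: {x99 / z97:.2f}x")
```

On paper, then, the X99 system has about two and a half times the memory bandwidth of the Z97 system.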

We ran all tests at a resolution of 1920 x 1080, except where specified, and our video cards were set to reference speeds, which translates to 947MHz/5000MHz (core/memory) for the Radeon R9 290 and 1000MHz/7000MHz for the GeForce GTX 980 Ti. Note that due to Nvidia’s GPU Boost 2.0 feature, the 980 Ti operated at 1202MHz/7000MHz at almost all times. The drivers utilized for the Radeon card were Catalyst 15.7.1 for Windows 10, and the drivers utilized for the GeForce card were GeForce 353.62 for Windows 10. We used two different cards not just to speak to different segments of the gaming market, but also to highlight whether AMD or Nvidia might benefit more from the extra cores.

We’ll be providing results for one synthetic benchmark and six games, as follows:

- 3DMark Fire Strike

- Grid 2

- Tomb Raider

- Crysis 3

- Battlefield 4

- Thief

- Far Cry 4

By choosing a variety of both older and newer games, we hope to find out whether one or the other is more dependent on extra cores, and perhaps whether specific game engines would benefit more from more cores, if at all.

All righty, then, hopefully we’ve made clear how we performed our tests. There’s lots of data to go through, but let’s start off easy, with an exploration of our 3DMark results, shall we?

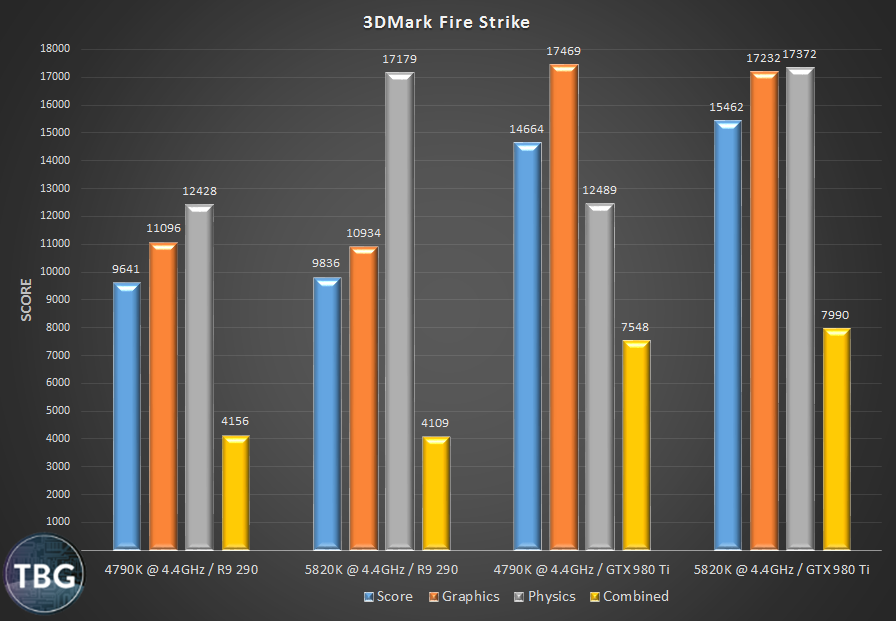

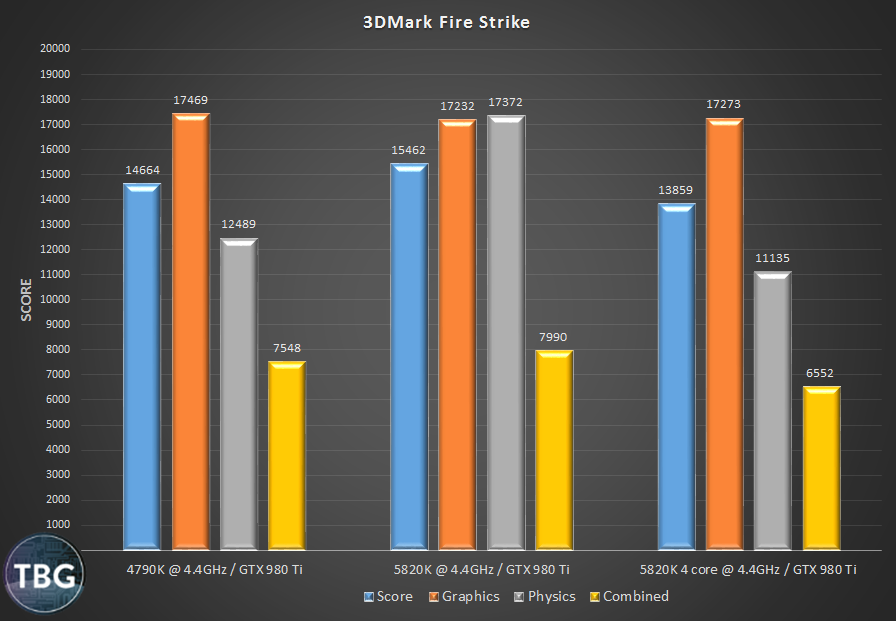

3DMark Fire Strike

Yes, we know, 3DMark isn’t a game. But it’s a fairly popular benchmark, and it just so happens to have separate Graphics and Physics tests that focus on video card and CPU performance, respectively. And that’s critical here, because we want to know whether the extra cores of the 5820K are having an effect. Well, right off the bat, we see that the 5820K-based systems have higher overall scores, along with much higher Physics computation scores. But delve a bit more deeply into the data and you’ll see that something’s amiss here. In terms of the Graphics score, which should be GPU-dependent, both of our video cards perform worse when paired with a 5820K. We’re only talking a 1% difference here, so it’s barely significant, but it’s not quite what you’d predict.

Based on these results, we might expect that actual game benchmarks will run slower on the 5820K. Next, we’ll see if the 3DMark Graphics Score was just a fluke…

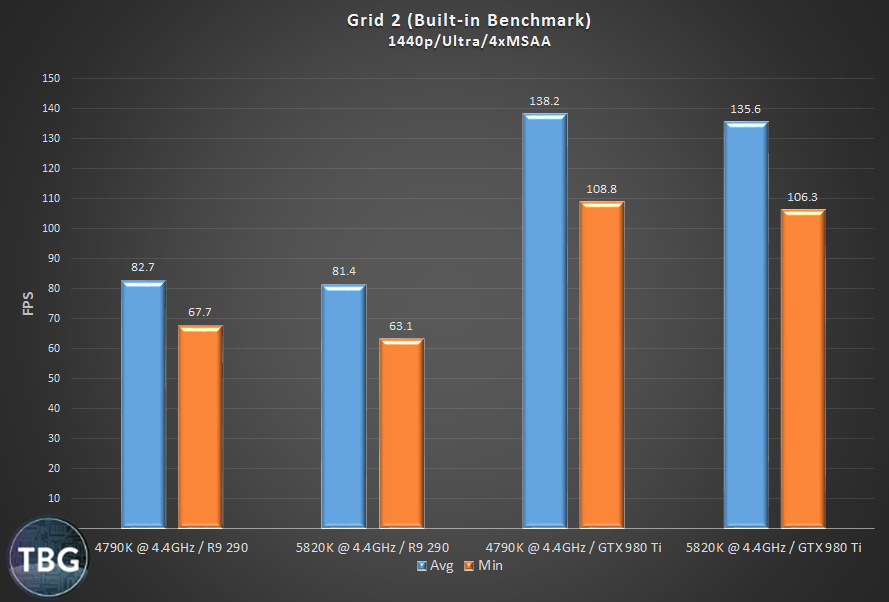

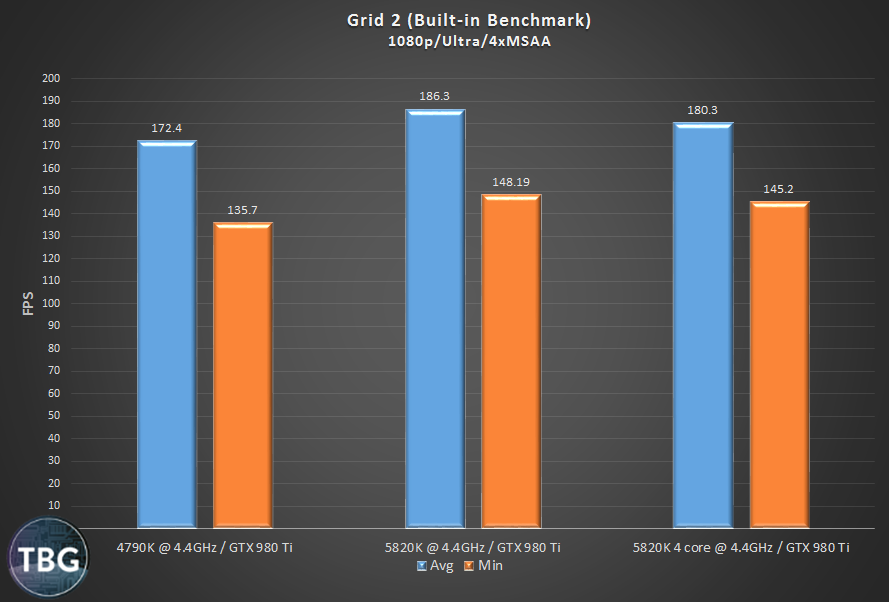

Grid 2

Grid 2, being based on an older graphics engine, exhibits framerates that are sky high on just about any modern system. In order to bring the Grid 2 results more in line with the other games tested for this analysis, we ran Grid 2 at a more taxing 2560 x 1440 resolution. All other games were run at 1920 x 1080, but as you’ll see, Grid 2 still had some of the highest framerates. When framerates get this high, performance tends to become more CPU-bound, and that should in turn lead to a victory for the Core i7-5820K. But this first game test seems to agree with 3DMark, with the 4790K-based system exhibiting a performance advantage of just under 2%.

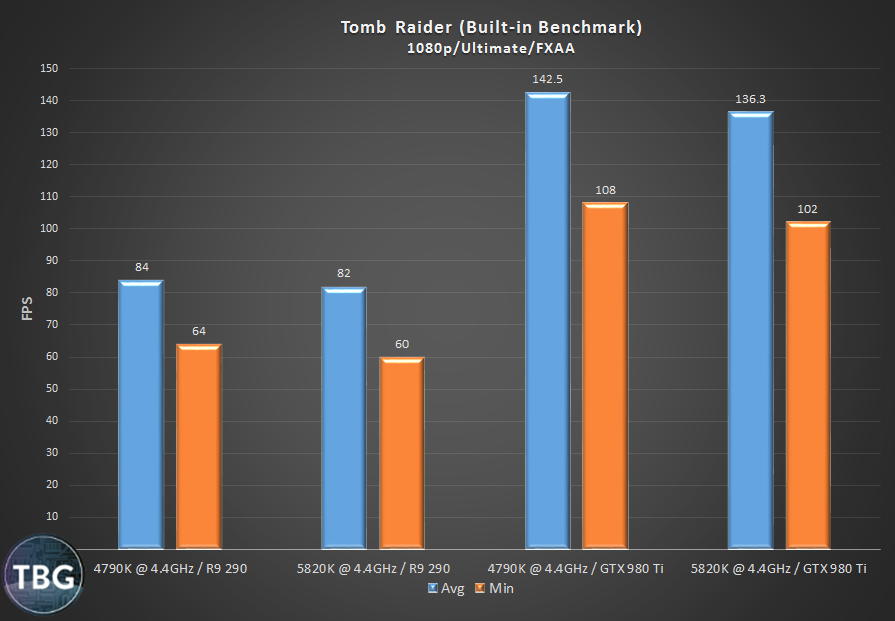

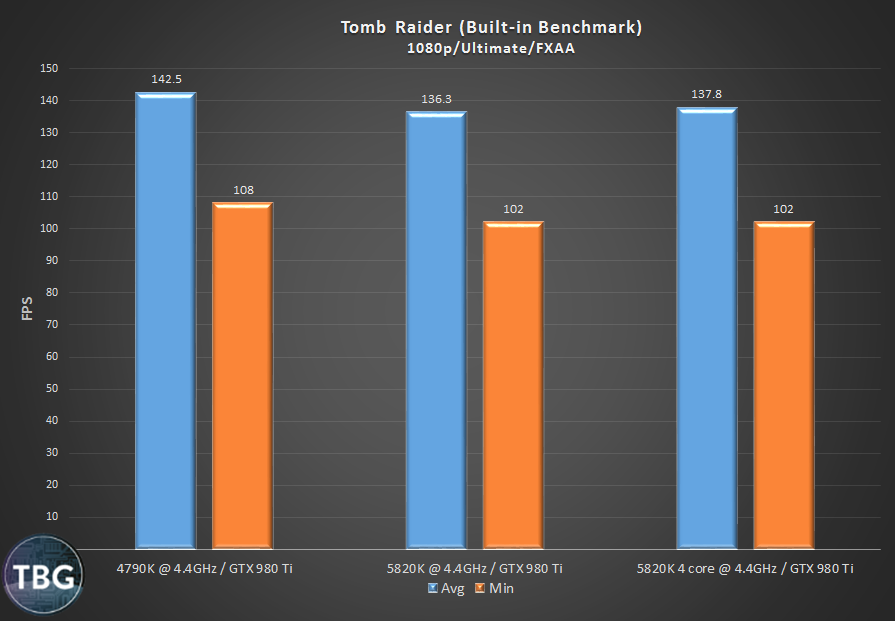

Tomb Raider

Ah, Tomb Raider. Fun to play, easy to benchmark with. That’s because it has a nice built-in test sequence which is almost completely GPU-bound. And yet again, things just aren’t going right for our 5820K-based system. In a test that should have both systems performing identically due to very low CPU load, the 4790K is again ahead, this time by a more commanding 2.5% using the Radeon R9 290 and 5% using the GTX 980 Ti. From the looks of it, the 4790K appears to be unleashing more performance from our GPUs, especially the ultra-high-end GTX 980 Ti.

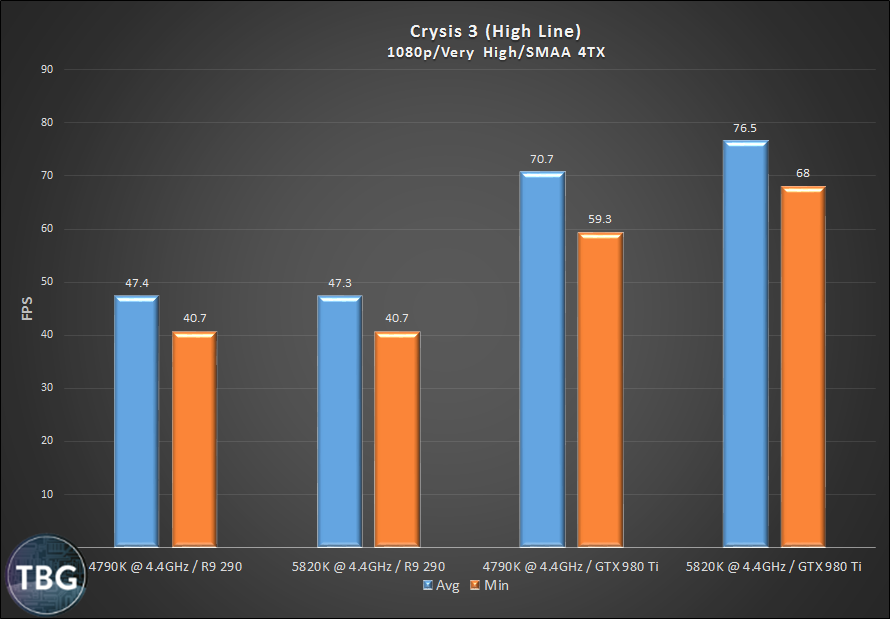

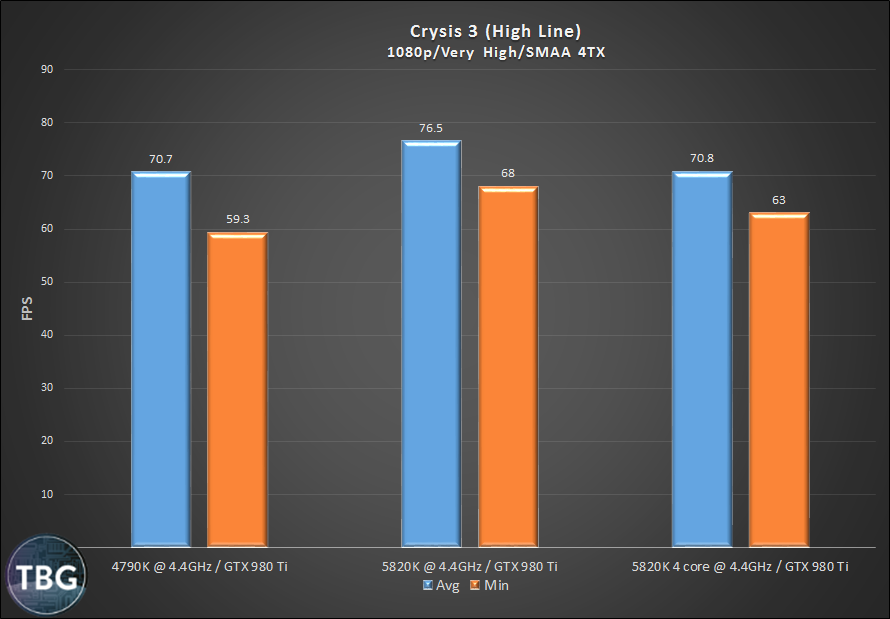

Crysis 3

This game is surprisingly demanding when it comes to CPU performance, but it also loves GPU power. What does that mean for its performance in our tests? Well, the 5820K surges out in front of the 4790K, at least when paired with the high-performance GTX 980 Ti. Using the less-powerful Radeon R9 290, performance is clearly GPU-bottlenecked. This is most definitely one game where having more CPU power at your disposal comes in handy if you have a high-end video card. And it’s not just the average that gets a boost; the minimum framerate on the GTX 980 Ti is an impressive 15% higher when paired with the 5820K. That’s a difference you can definitely feel.

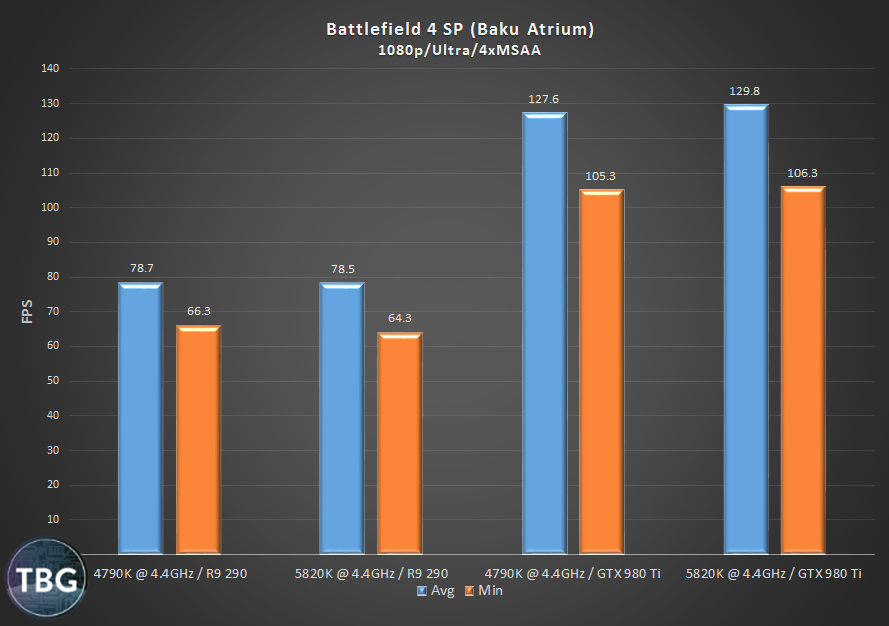

Battlefield 4

Battlefield 4 can be somewhat CPU-bound, even in the single-player mode that we tested here, so we expected the 5820K to give the game a boost. Indeed, with the GTX 980 Ti, performance is about 2% higher, but as with the Crysis 3 results, Battlefield 4 is GPU-limited on the Radeon R9 290, meaning that extra CPU power just doesn’t help.

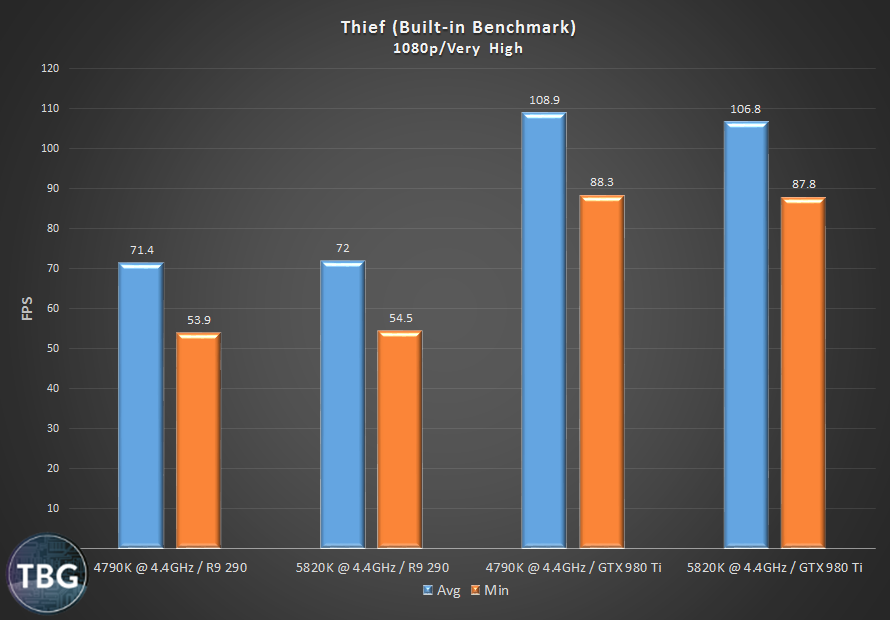

Thief

The results in this game are really, really close, with a split decision based on the video card in use. It’s a game that we’d expect to be essentially GPU-bound, but in fact the Radeon R9 290 performs better when teamed up with the Core i7-5820K, while the GeForce GTX 980 Ti performs worse. That is most definitely hard to explain! We’d guess that the Radeon drivers in this game may impose an additional CPU overhead, thereby responding positively to the presence of extra cores and overcoming the drag resulting from the use of the 5820K-based system.

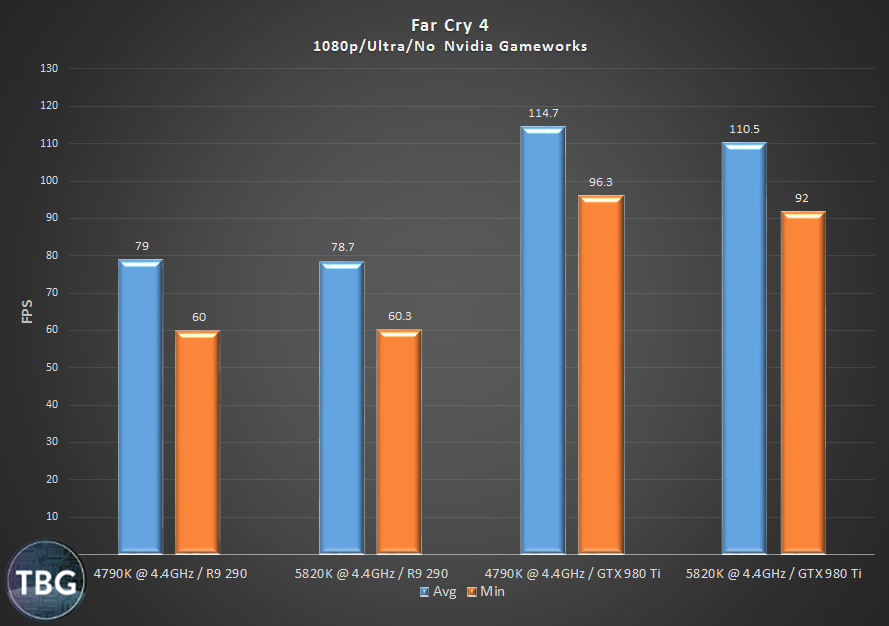

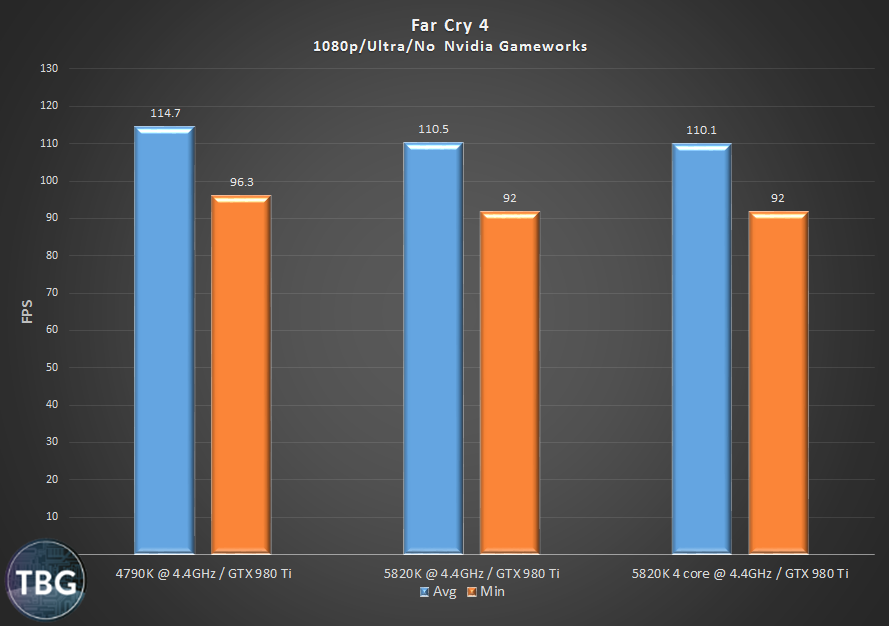

Far Cry 4

We end with one of 2014’s biggest hits, Far Cry 4. Because it uses a relatively modern game engine, we thought it might like not just extra GPU power but extra CPU power as well. Alas, it was not to be, as the 5820K-based system again trailed the 4790K-based system, at least when paired with the powerful GTX 980 Ti video card.

The Theoretical 5820K Quad-Core

Truth be told, we were floored by the results of our benchmarks. They simply didn’t make sense. We double- and triple-checked them, confirming that our RAM was operating in quad-channel mode, that the PCIe slot was operating at the full PCIe 3.0 x16 speed, and that the CPUs truly were operating at the same 4.4GHz frequency. We even performed some additional testing setting the cache to the same speeds (the 4790K’s cache is faster by default). Alas, the 5820K just couldn’t catch a break. In almost all of our tests, it was slower than the 4790K using the same video cards.

Still unsatisfied with our results, we decided to approach the test in another way, namely by disabling two cores on the 5820K to emulate a 4790K. Surely, if there were a bottleneck imposed by the X99-based system, taking away its core advantage would make this bottleneck obvious. Below we provide data for 3DMark, along with the two games that performed the best on the hex-core 5820K and the two games that performed the worst, using just our ultra-high-end GeForce GTX 980 Ti to really push these CPUs to their breaking points. And as you’ll see, the results just aren’t that straightforward.

3DMark Fire Strike

From the get-go, things are looking dire for the handicapped 5820K, shown on the right side of our graph. Despite having the same theoretical processing power as the 4790K, along with more memory bandwidth and more cache, it performs much, much worse. Sure, in the GPU-bound Graphics test it’s about even, but it falls nearly 13% behind in the Physics test, and is also soundly beaten in the Combined test. Something just isn’t right here!

Grid 2

For these tests, we upped the CPU ante by running at a resolution of 1080p, and so these results aren’t directly comparable to our Grid 2 results a few pages back, which looked at a 1440p resolution. But we generally expected the same pattern: performance would be slightly better on the 4790K-based system, right? Well, our test system threw more surprises our way, as the 5820K-based system was significantly faster, not just with all six cores enabled, but also when running on just four cores. Oh boy, this is getting complicated!

Tomb Raider

Well, another slightly strange result here with Tomb Raider, where the 5820K performs ever-so-slightly better when only four of its cores are enabled, but it’s still not catching the 4790K. Weird, just weird.

Crysis 3

We know from plenty of previous testing, as well as the results a few pages back, that this game is seriously CPU-bound with a high-end video card. So six cores wins the day. But taking away two of the 5820K’s cores doesn’t turn it into a 4790K, apparently, as it still exhibits significantly better minimums than its nearly identical cousin. The most obvious explanation here is that either the extra cache or extra memory bandwidth offered by the 5820K/X99 duo provides a boost even when you don’t have the extra cores of the 5820K at your disposal.

Far Cry 4

And finally, we conclude with a total lack of drama. No, disabling cores on the 5820K doesn’t magically bring it up to par with the 4790K at the same clock speed, but nor does it hurt performance. This game is clearly held back by something in the X99 platform that extra cores cannot remedy.

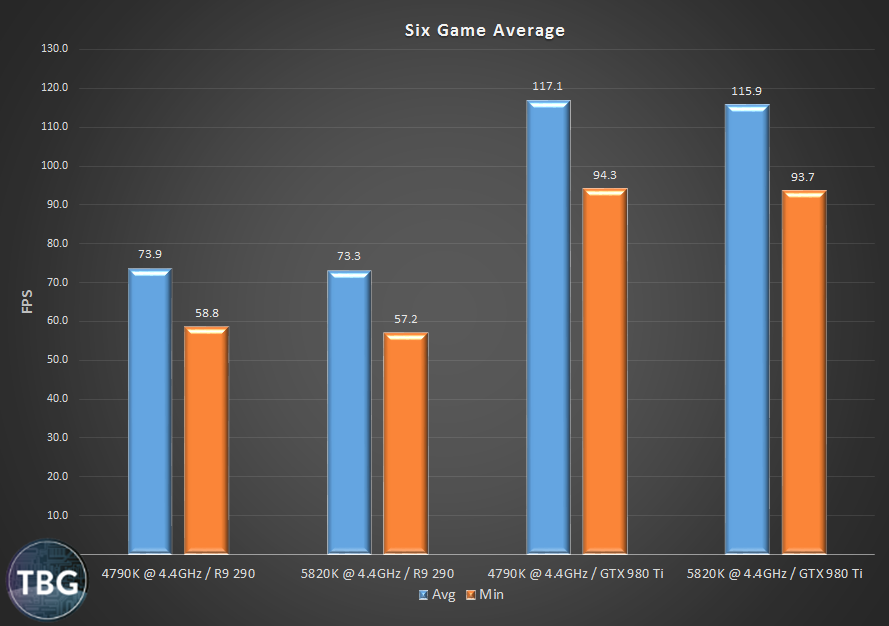

Conclusion

Does having more cores perform miracles? Well, not quite. In fact, only in a handful of the games we tested does it provide any boost at all, with the biggest boost being in Crysis 3. And that speaks to the overall “core debate” that enthusiasts have been engaged in ever since the first dual-core appeared on the market more than a decade ago. Unless games are designed to take advantage of extra cores, those cores do no good at all. But taking a look at our six-game average shown below, one is almost forced to conclude that the extra cores somehow act as extra baggage, exacting an overall performance penalty.

There are a few other angles we can take on the data we collected beyond just averages. First, while results varied by game, overall, both the Radeon and GeForce cards were negatively affected by being paired with the 5820K. That negates one of our original hypotheses: that AMD driver overhead would allow the Radeon to unleash hidden potential when paired with more cores. Second, both averages and minimums are negatively impacted, meaning whatever is holding back the 5820K-based system is a broad-based effect. And there’s one last piece of the puzzle we haven’t yet touched upon, namely power use. We found that our 5820K-based system drew 75W at idle, versus just 41W for our 4790K-based system, while drawing 418W under a 3DMark combined load, versus 359W for the 4790K-based system. That alone may be a tie-breaker for many people, especially the idle numbers.
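To show why the idle numbers stand out, here is a quick sketch that works out the power gap from the four readings above. The `power_gap` helper is our own naming, and the eight-hours-a-day idle figure used for the yearly estimate is purely a hypothetical usage pattern, not something we measured.

```python
# Wall-power readings quoted above, in watts
power_w = {
    "idle": {"5820K": 75, "4790K": 41},
    "3DMark combined load": {"5820K": 418, "4790K": 359},
}


def power_gap(readings):
    """Return (extra watts, extra percent) drawn by the 5820K-based system."""
    extra_w = readings["5820K"] - readings["4790K"]
    return extra_w, round(extra_w / readings["4790K"] * 100, 1)


gaps = {state: power_gap(readings) for state, readings in power_w.items()}
for state, (extra_w, extra_pct) in gaps.items():
    print(f"{state}: +{extra_w}W ({extra_pct}% more)")

# Hypothetical usage pattern: system sits idle 8 hours a day, every day
IDLE_HOURS_PER_DAY = 8
extra_idle_kwh_per_year = round(gaps["idle"][0] * IDLE_HOURS_PER_DAY * 365 / 1000, 1)
print(f"Extra idle energy per year: {extra_idle_kwh_per_year} kWh")
```

In relative terms the gap is far larger at idle than under load, which is why a system that spends most of its life on the desktop will feel the difference most.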

Overall, then, this is what we’d suggest to potential PC builders: unless you’re looking to gain additional future-proofing for games coded a la Crysis 3, a fast quad-core is really what you’re after. And that’s not all. With the release of the faster Core i7-6700K and the DDR4-based Z170 platform, things could get nasty for the Core i7-5820K. That’s because the Skylake-based 6700K offers about 10% higher performance than the Haswell design at the same core clock. Also looming on the horizon are DirectX 12-based games, which will be coded much more efficiently to reduce CPU overhead. This could work either in favor of lower core counts, or perhaps in favor of higher core counts if developers use that increased efficiency to load on extra processing effects.

There is a caveat to all of this, however, and that is the dual-GPU scenario. If you’re going to go with two high-end cards in Crossfire or SLI, the extra cores offered by the 5820K and its Haswell-E brethren, the Core i7-5930K and Core i7-5960X, along with their greater number of PCIe lanes, will more than even the odds. The more GPU power you throw at a game, the more the CPU will struggle to keep up, so the extra power of the X99 platform pays off in dual-card scenarios.

Need more advice? Well, for our take on the best overall system builds, check out our TBG Do-It-Yourself Buyer’s Guides, which are updated on a monthly basis.